27: CIPS Explained

Image Generators with Conditionally-Independent Pixel Synthesis by Anokhin et al. explained in 5 minutes.

⭐️Paper difficulty: 🌕🌕🌑🌑🌑

🎯 At a glance:

Generative models have become synonymous with convolutions and, more recently, with self-attention, yet we (yes, I am the second author of this paper, yay 🙌) ask the question: are convolutions REALLY necessary to generate state-of-the-art quality images? Perhaps surprisingly, a simple multilayer perceptron (MLP) with a couple of clever tricks does just as well as (if not better than) specialized convolutional architectures (StyleGAN2) at 256x256 resolution.

⌛️ Prerequisites:

(Highly recommended reading to understand the core ideas in this paper):

🔍 Main Ideas:

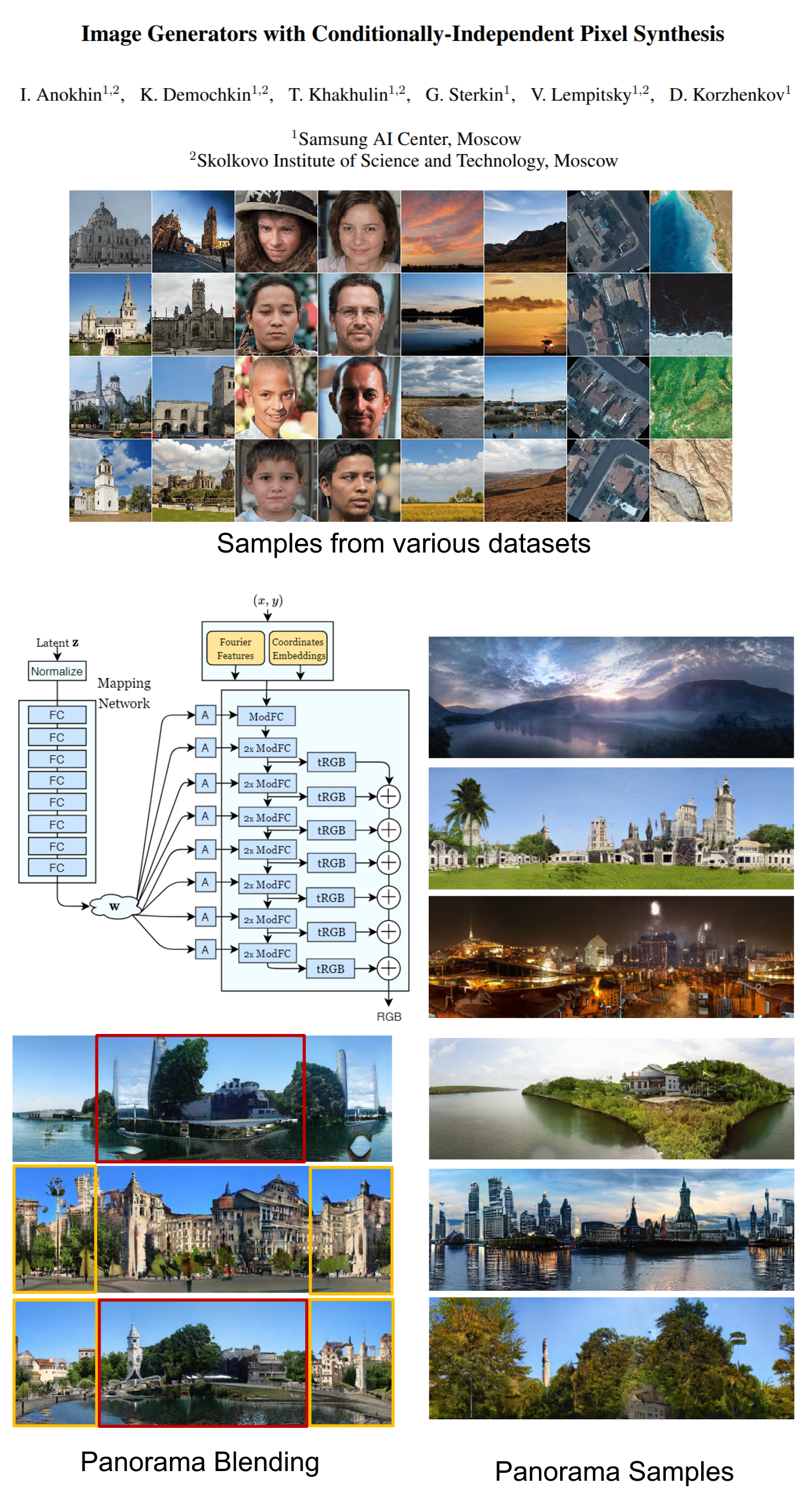

1) Multilayer Perceptron generator:

The main idea is to use an MLP that independently predicts the RGB values of each pixel from its coordinates and a latent vector. To achieve this, we started with the StyleGAN2 generator, changed the kernel sizes of all convolutions from 3x3 to 1x1 (a 1x1 convolution applies the same fully connected layer at every pixel, so the network becomes a per-pixel MLP), and removed all upsampling operations. In place of the 4x4 constant input from StyleGAN2, we use a 256x256 coordinate grid. We believe this helps model the long-range dependencies crucial to high-quality image synthesis without sharing information between adjacent pixels. The discriminator was kept unchanged.
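To make "conditionally independent" concrete, here is a minimal sketch in plain Python (stdlib only, random untrained weights). It is a toy, not our actual generator: the names `make_mlp`, `pixel_rgb` and `coord_grid` are made up for illustration, and the latent is simply concatenated to the coordinates as a simplifying assumption, whereas the real model injects it through StyleGAN2-style modulation. The point it shows is that a pixel's color depends only on its own coordinates and the shared latent, so any subset of pixels can be generated on its own.

```python
import math
import random


def make_mlp(sizes, seed=0):
    """Random (untrained) weights for a tiny MLP, e.g. sizes=[10, 16, 16, 3]."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)
        weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def pixel_rgb(layers, x, y, latent):
    """RGB of ONE pixel, computed from its own (x, y) and the shared latent only."""
    h = [x, y] + list(latent)  # assumption: latent is concatenated, not modulated
    for i, (weights, biases) in enumerate(layers):
        h = [sum(w * v for w, v in zip(row, h)) + b for row, b in zip(weights, biases)]
        if i < len(layers) - 1:
            h = [v if v > 0 else 0.2 * v for v in h]  # leaky ReLU on hidden layers
    return [math.tanh(v) for v in h]  # squash to [-1, 1]


def coord_grid(size):
    """Pixel-centre coordinates normalised to [-1, 1], in row-major order."""
    axis = [2.0 * (i + 0.5) / size - 1.0 for i in range(size)]
    return [(x, y) for y in axis for x in axis]


rng = random.Random(1)
latent = [rng.gauss(0.0, 1.0) for _ in range(8)]
layers = make_mlp([2 + 8, 16, 16, 3])

grid = coord_grid(8)
image = [pixel_rgb(layers, x, y, latent) for x, y in grid]

# Generate only four pixels, without touching the rest of the image.
patch = [pixel_rgb(layers, x, y, latent) for x, y in grid[10:14]]
```

Since no information flows between pixels, `patch` matches the corresponding entries of `image` exactly.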

2) Positional encoding:

We quickly found that a coordinate grid projected to 512-dimensional space via Fourier features (several sines and cosines of various frequencies evaluated at each of the coordinates) was not enough on its own, as we kept seeing wave-like artifacts in the images. Hence, we additionally use learnable coordinate embeddings that act as a constant input and vary at each pixel location. We found these coordinate embeddings to be vital for improving high-frequency features in the synthesized images.
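A rough sketch of how the two parts of the positional encoding fit together, again in plain Python. The assumptions are loud ones: the frequencies here are fixed powers of two, the embedding table is just random numbers standing in for learned parameters, and the sizes are tiny instead of 512. `fourier_features`, `make_coord_embeddings` and `positional_encoding` are hypothetical helper names, not functions from our repo.

```python
import math
import random


def fourier_features(x, y, num_freqs=4):
    """Sines and cosines of several frequencies evaluated at each coordinate."""
    feats = []
    for k in range(num_freqs):
        freq = (2.0 ** k) * math.pi  # assumption: fixed power-of-two frequencies
        for c in (x, y):
            feats.append(math.sin(freq * c))
            feats.append(math.cos(freq * c))
    return feats


def make_coord_embeddings(size, dim, seed=0):
    """One vector per pixel location; random here, learnable in a real model."""
    rng = random.Random(seed)
    return [
        [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(size)]
        for _ in range(size)
    ]


def positional_encoding(col, row, size, embeddings, num_freqs=4):
    """Concatenate Fourier features with the per-pixel coordinate embedding."""
    x = 2.0 * (col + 0.5) / size - 1.0
    y = 2.0 * (row + 0.5) / size - 1.0
    return fourier_features(x, y, num_freqs) + embeddings[row][col]


size, dim = 8, 6
emb = make_coord_embeddings(size, dim)
enc = positional_encoding(3, 5, size, emb)
```

With 4 frequencies, 2 coordinates, and a sine plus a cosine each, the Fourier part has 16 entries, followed by the 6-dimensional embedding for that pixel.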

3) Cylindrical panoramas:

One of the interesting consequences of generating pixels independently is that it is possible to use non-rectangular coordinate grids. For example, we show that by training on crops from a cylindrical coordinate grid, CIPS is able to synthesize full, seamless cyclical panoramas at test time. Moreover, by interpolating between two different latent codes on the opposite sides of the cylindrical grid, CIPS is able to smoothly blend the content from two different panoramas.
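Here is one way to picture the cylindrical grid, as a small sketch. The exact parameterization is my assumption for illustration: each column becomes an angle, and the pixel coordinate is (cos θ, sin θ, height), so the left and right image edges land on the same points. The cosine blending weight in `blend_latents` is likewise just one plausible choice, and both function names are hypothetical.

```python
import math


def cyl_point(col, row, width, height):
    """Map a pixel to a point on a unit cylinder: (cos θ, sin θ, h)."""
    theta = 2.0 * math.pi * col / width
    h = 2.0 * (row + 0.5) / height - 1.0
    return (math.cos(theta), math.sin(theta), h)


def blend_latents(z_a, z_b, theta):
    """Cyclic interpolation: pure z_a at θ = 0, pure z_b on the opposite side (θ = π)."""
    w = 0.5 * (1.0 + math.cos(theta))
    return [w * a + (1.0 - w) * b for a, b in zip(z_a, z_b)]


width, height = 16, 4
first_column = cyl_point(0, 2, width, height)
wrapped_column = cyl_point(width, 2, width, height)  # one full turn later

z_a, z_b = [1.0, 0.0], [0.0, 1.0]
front = blend_latents(z_a, z_b, 0.0)
back = blend_latents(z_a, z_b, math.pi)
```

Going one full turn around the cylinder returns to the starting coordinates (up to floating-point error), which is why a per-pixel generator fed these coordinates has no seam to hide.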

📈 Experiment insights / Key takeaways:

- We beat StyleGAN2 on LSUN churches

- We are almost as good in terms of FID on FFHQ and Landscapes

- We got a better (lower) FID on two custom satellite datasets thanks to CIPS’ rotation-invariant 1x1 kernels, since the images in these datasets are randomly rotated by up to 360 degrees during training

- CIPS does not have artifacts in the high-frequency range of the generated image spectrum present in StyleGAN

- CIPS can be used for foveated rendering, where pixels are sampled more densely closer to the middle of the image
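The foveated rendering point boils down to choosing which coordinates to evaluate. A minimal sketch, assuming a simple sampling scheme of my own choosing (uniform radius in polar coordinates, which packs samples more densely near the centre); `foveated_samples` is a hypothetical helper, not something from the paper:

```python
import math
import random


def foveated_samples(n, seed=0):
    """Sample n points in the unit disk, denser towards the centre."""
    rng = random.Random(seed)
    points = []
    for _ in range(n):
        r = rng.random()  # uniform radius: density falls off as 1/r
        theta = 2.0 * math.pi * rng.random()
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


samples = foveated_samples(2000)

# Count samples in the inner disk of radius 0.5, which is 25% of the area.
inner = sum(1 for x, y in samples if math.hypot(x, y) < 0.5)
```

Roughly half of the samples should fall in the inner quarter of the area. Each sampled coordinate can then be passed to any per-pixel generator, with no need to render a full rectangular grid first.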

🖼️ Paper Poster:

✏️My Notes:

- (4/5) for the name. It looks slick, but even though I am the second author of the paper I am still not sure how to correctly pronounce CIPS 😅

- Spatially varying styles are awesome for latent image inversion: they keep way more detail in reconstructed images than StyleGAN

- CIPS only uses about 4 times the operations of StyleGAN

- CIPS can be trained on patches

- CIPS can be finetuned in 1024x1024 resolution

- CIPS with demodulations is insanely memory-hungry since for each unique style used you have to keep a copy of the entire model in memory, which is necessary to use spatial styles

🔗 Links:

CIPS arxiv / CIPS github <- please give us a star on GitHub if you liked the paper! 😊

👋 Thanks for reading!

By: @casual_gan

P.S. Send me paper suggestions for future posts @KirillDemochkin!