35: DNR Explained

Deferred Neural Rendering: Image Synthesis using Neural Textures by Justus Thies et al. explained in 5 minutes.

⭐️Paper difficulty: 🌕🌕🌕🌕🌑

The concepts are not hard, but the paper is really wordy.

🎯 At a glance:

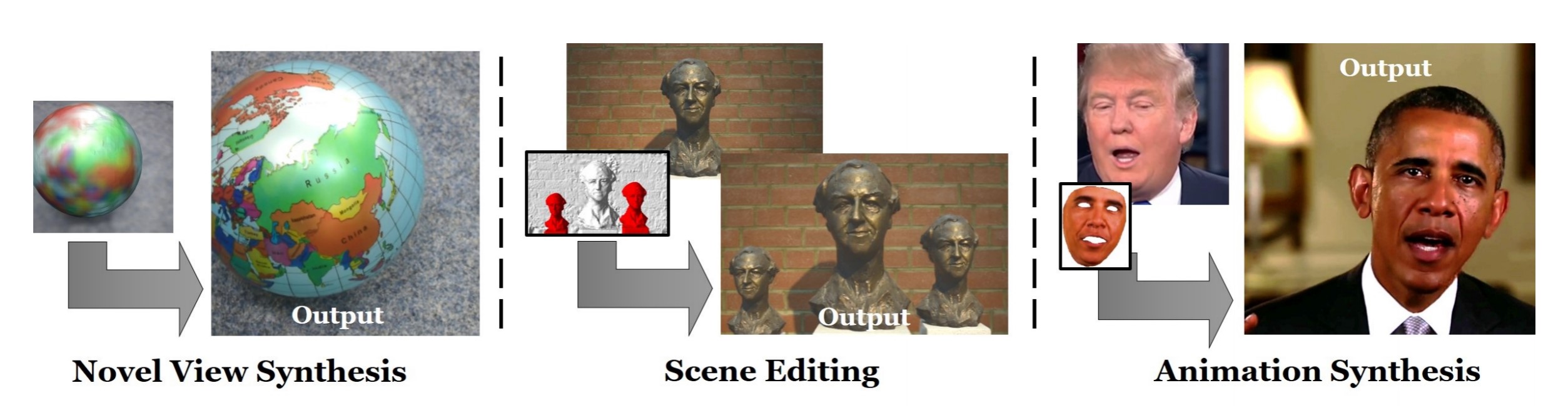

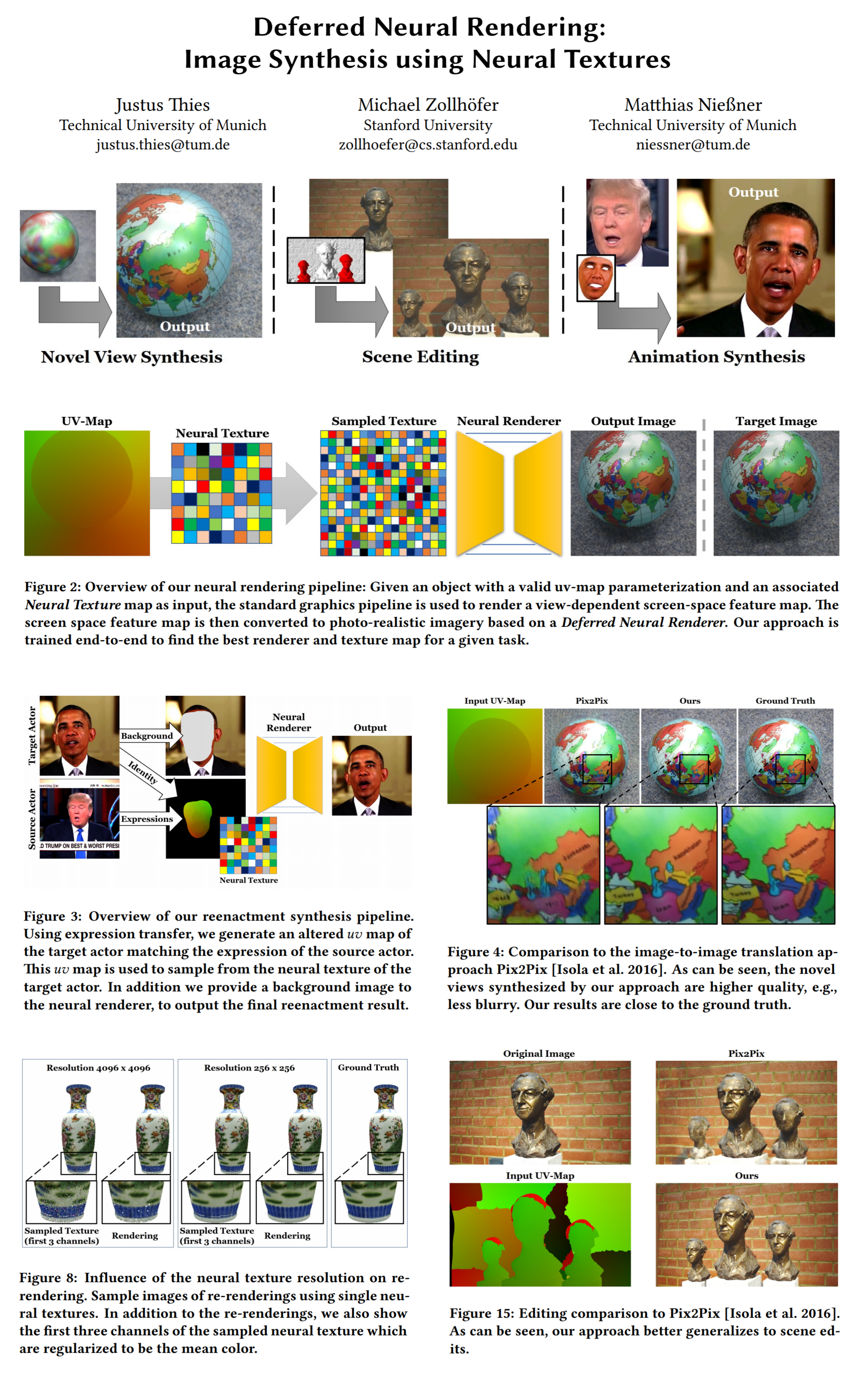

How can we synthesize images of 3d objects with explicit control over the generated output when only limited, imperfect 3d input is available (for example, from several frames of a video)? Justus Thies and his colleagues propose a new paradigm for image synthesis called Deferred Neural Rendering that combines the traditional graphics pipeline with learnable components called Neural Textures, which are feature maps stored on top of 3d mesh proxies. The new learnable rendering pipeline uses the additional information from the 3d representation to synthesize novel views, edit scenes, and do facial reenactment at state-of-the-art levels of quality.

⌛️ Prerequisites:

(Highly recommended reading to understand the core contributions of this paper):

None 🤷♂️

🔍 Main Ideas:

1) Overview:

Essentially, the proposed pipeline consists of a high-dimensional texture that is sampled according to the UV map of a target view to produce a feature map of the desired resolution. This feature map is then processed by a convolutional U-Net-like model to synthesize the final RGB image.
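To make the sampling step concrete, here is a minimal pure-Python sketch of turning a screen-space UV map into a feature map. The function name `sample_feature_map`, the nearest-texel lookup, the use of `None` for background pixels, and the zero vector written for them are all assumptions made for illustration, not the authors' implementation. The U-Net that would consume the result is left out.

```python
def sample_feature_map(texture, uv_map):
    """Look up one texture feature vector per screen pixel (nearest texel)."""
    tex_h, tex_w = len(texture), len(texture[0])
    channels = len(texture[0][0])
    feature_map = []
    for uv_row in uv_map:
        out_row = []
        for uv in uv_row:
            if uv is None:
                # Assumption: pixels not covered by the mesh get a zero vector.
                out_row.append([0.0] * channels)
                continue
            u, v = uv
            # Map normalized UV in [0, 1] to integer texel indices.
            col = min(int(u * tex_w), tex_w - 1)
            row = min(int(v * tex_h), tex_h - 1)
            out_row.append(list(texture[row][col]))
        feature_map.append(out_row)
    return feature_map


# Toy 4x4 texture with 2 channels storing (row, col), so lookups are easy to read.
texture = [[[float(r), float(c)] for c in range(4)] for r in range(4)]
# 2x2 screen-space UV map; None marks a background pixel.
uv_map = [[(0.0, 0.0), (0.99, 0.0)],
          [(0.5, 0.5), None]]
feature_map = sample_feature_map(texture, uv_map)
```

The output has the resolution of the UV map, not of the texture, which is why the texture can be larger than the target image.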

2) Neural Textures:

This is just a learnable 16-channel tensor of higher resolution than the target image. Since it is hard to know beforehand what resolution is high enough for the neural texture to store all the fine details, a hierarchical scheme is introduced. Instead of a single texture learning features at all scales, several textures of various resolutions are learned. Due to the regularization of the features in the higher-resolution textures, they tend to learn the high-frequency detail, while the low-resolution textures learn the low-frequency features. Each texture in the hierarchy is sampled at the same UV coordinates with bilinear interpolation, and the samples are added together like a Laplacian Pyramid. Note that the sampling process is differentiable, which allows the whole pipeline to be trained end-to-end.
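The hierarchical lookup can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the helper names `bilinear_sample` and `sample_hierarchy`, the nested-list texture layout, and the convention that UV 0 and 1 land exactly on the border texels are mine, not taken from the paper. Each output value is a weighted sum of texel values, which is what makes the lookup differentiable with respect to the texture.

```python
def bilinear_sample(texture, u, v):
    """Sample a texture (rows of per-texel feature lists) at UV in [0, 1]."""
    height, width = len(texture), len(texture[0])
    # Assumption: UV 0 and 1 map to the centers of the border texels.
    x = u * (width - 1)
    y = v * (height - 1)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    wx, wy = x - x0, y - y0
    channels = len(texture[0][0])
    return [
        (1 - wy) * ((1 - wx) * texture[y0][x0][c] + wx * texture[y0][x1][c])
        + wy * ((1 - wx) * texture[y1][x0][c] + wx * texture[y1][x1][c])
        for c in range(channels)
    ]


def sample_hierarchy(levels, u, v):
    """Sum the bilinear samples of every pyramid level at the same UV."""
    samples = [bilinear_sample(level, u, v) for level in levels]
    return [sum(channel) for channel in zip(*samples)]


# Toy 1-channel pyramid: a 2x2 coarse level plus a 4x4 fine level.
coarse = [[[0.0], [1.0]], [[2.0], [3.0]]]
fine = [[[0.1 * (r * 4 + c)] for c in range(4)] for r in range(4)]
feature = sample_hierarchy([coarse, fine], 0.5, 0.5)
```

In a real setup every level would hold 16 learnable channels and the texel values would be optimized by backpropagation together with the renderer.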

3) Deferred Neural Renderer:

The renderer is a U-Net that synthesizes an RGB image from an input feature map, which is sampled from the Neural Texture Pyramid according to the input UV map that links points on the 3d mesh to coordinates on the 2d textures.

View-dependent effects are achieved by channel-wise multiplying the input feature map with the first 3 bands of spherical harmonics (9 coefficients evaluated from the input view direction), so that the features fed to the renderer change with the view direction.
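As a rough illustration of this step, the sketch below evaluates the 9 real spherical harmonic basis functions of bands 0 to 2 for a view direction and multiplies them into 9 channels of a per-pixel feature vector. The function names, the `start` parameter that picks which channels are modulated, and the sign convention of the basis are assumptions of this sketch. Conventions differ between libraries, and the paper should be checked for the exact channels used.

```python
import math


def sh_basis_9(direction):
    """Real spherical harmonics for bands 0-2, evaluated at a view direction."""
    x, y, z = direction
    norm = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / norm, y / norm, z / norm
    return [
        0.282095,                        # band 0
        0.488603 * y,                    # band 1
        0.488603 * z,
        0.488603 * x,
        1.092548 * x * y,                # band 2
        1.092548 * y * z,
        0.315392 * (3.0 * z * z - 1.0),
        1.092548 * x * z,
        0.546274 * (x * x - y * y),
    ]


def apply_view_dependence(features, direction, start=0):
    """Multiply 9 consecutive feature channels by the SH basis values."""
    basis = sh_basis_9(direction)
    out = list(features)
    for i, b in enumerate(basis):
        # `start` is a hypothetical parameter selecting the modulated channels.
        out[start + i] *= b
    return out


# A 16-channel feature vector of ones, viewed along the +z axis.
modulated = apply_view_dependence([1.0] * 16, (0.0, 0.0, 1.0))
```

Channels outside the chosen range pass through unchanged, so part of the texture stays view-independent.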

📈 Experiment insights / Key takeaways:

- The proposed approach outperforms classic IBR methods

- Deferred Neural Rendering (DNR) is able to edit reconstructed scenes from videos

- DNR is able to use a driving video to animate a target face

🖼️ Paper Poster:

✏️My Notes:

- (?/5) no rating for the name since the authors didn’t come up with one 😐

- Wait what, no GAN loss used in training? 🤨

- The pipeline is designed to optimize for one scene at a time (Does a mapper already exist for it?)

- I wonder whether the UV map could be replaced with some more sophisticated method of representing the underlying mesh?

🔗 Links:

DNR arxiv / DNR github Unofficial

👋 Thanks for reading!

If you found this paper digest useful, subscribe and share the post with your friends and colleagues to support Casual GAN Papers!

Join the Casual GAN Papers telegram channel to stay up to date with new AI Papers!

Join Patreon for Exclusive Perks!

By: @casual_gan

P.S. Send me paper suggestions for future posts @KirillDemochkin!