28: SimSwap Explained

SimSwap: An Efficient Framework For High Fidelity Face Swapping by Renwang Chen et al. explained in 5 minutes.

⭐️Paper difficulty: 🌕🌕🌑🌑🌑

🎯 At a glance:

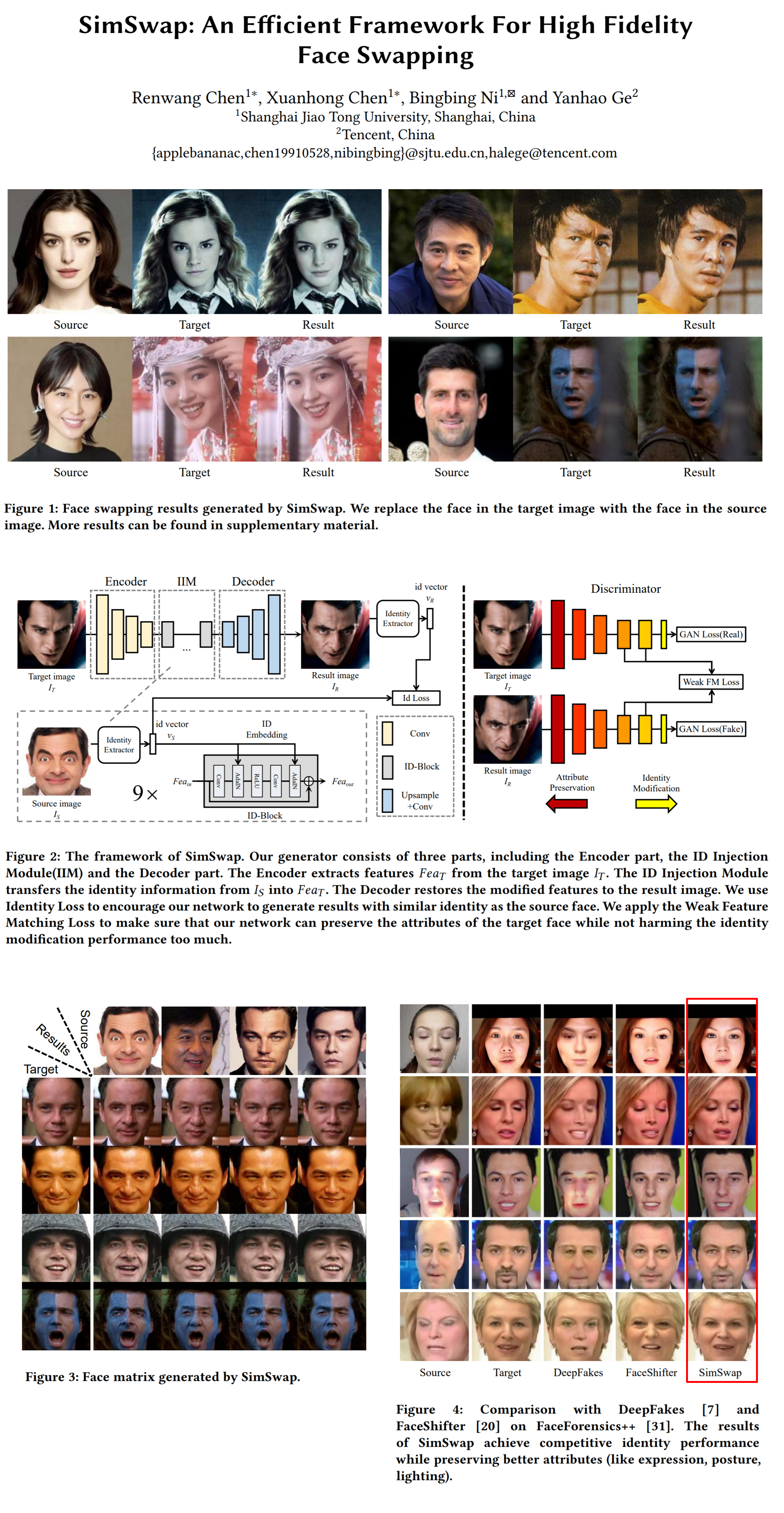

Face-swap apps have been around for ages, so you might think that swapping the faces of two people is trivial, but in reality it is far more complicated. The authors from Tencent suggest that existing approaches are limited in two main ways: they either cannot generalize to arbitrary faces or fail to preserve attributes like facial expression and gaze direction. The proposed method, SimSwap, leverages a new ID Injection Module and a Weak Feature Matching Loss that aim to solve both of these issues.

⌛️ Prerequisites:

(Highly recommended reading to understand the core ideas in this paper):

🔍 Main Ideas:

1) Limitations of DeepFakes: Due to the architecture (a shared encoder and two identity-specific decoders) and the training procedure, the encoder features contain both the identity and the attribute information of the target face. Since the decoder can still convert the target’s features to the source identity, the identity information must be stored in the decoder’s weights. That is why the model cannot generalize to an arbitrary person.
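To make the limitation concrete, here is a toy sketch of the DeepFakes layout described above. It is not the actual DeepFakes code: the linear "layers", the sizes, and the names `encoder`, `decoders`, and `swap` are all made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy linear stand-ins (hypothetical sizes): one shared encoder,
# one decoder per identity seen during training.
encoder = rng.normal(size=(8, 32))
decoders = {
    "person_a": rng.normal(size=(32, 8)),
    "person_b": rng.normal(size=(32, 8)),
}

def swap(target_face, source_name):
    # The latent code still carries the target's identity and attributes.
    latent = encoder @ target_face
    # The source identity comes only from which decoder's weights are used.
    return decoders[source_name] @ latent

face = rng.normal(size=32)
swapped = swap(face, "person_a")
```

Calling `swap(face, "person_c")` fails with a `KeyError`, which mirrors the point above: an identity without its own trained decoder simply cannot be produced.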

2) ID Injection Module: Seeking a way to separate the identity information from the decoder’s weights, the authors propose an ID Injection Module between the encoder and the decoder. This module first extracts an identity vector from the source image using a face recognition network and then injects this identity information into the encoder features via AdaIN layers. A PatchGAN discriminator is used to improve the quality of the generated images.
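A minimal NumPy sketch of the AdaIN step may help here. It only shows the general AdaIN idea (normalize each channel, then rescale and shift it with statistics predicted from the identity vector) and is not the authors' implementation. The function name `adain_inject`, the linear maps `w_scale` and `w_shift`, and all sizes are assumptions.

```python
import numpy as np

def adain_inject(features, id_vector, w_scale, w_shift, eps=1e-5):
    """Modulate encoder features with an identity vector via AdaIN.

    features:  (C, H, W) feature map of the target face
    id_vector: (D,) identity embedding of the source face
    w_scale, w_shift: (C, D) hypothetical linear maps from the identity
        vector to per-channel scale and shift
    """
    # Instance normalization: zero mean, unit variance per channel.
    mean = features.mean(axis=(1, 2), keepdims=True)
    std = features.std(axis=(1, 2), keepdims=True)
    normalized = (features - mean) / (std + eps)

    # Per-channel statistics predicted from the identity vector.
    scale = (w_scale @ id_vector)[:, None, None]
    shift = (w_shift @ id_vector)[:, None, None]
    return scale * normalized + shift

rng = np.random.default_rng(0)
features = rng.normal(size=(4, 8, 8))   # toy encoder output
id_vector = rng.normal(size=16)         # toy identity embedding
w_scale = rng.normal(size=(4, 16))
w_shift = rng.normal(size=(4, 16))
out = adain_inject(features, id_vector, w_scale, w_shift)
```

After this step the per-channel mean and spread of `out` are set by the identity vector alone, while the spatial layout of the target's features is kept.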

3) Weak Feature Matching Loss: Just replicating the source identity is not enough to create a face swap. It is also required to keep various attributes such as the expression, position, lighting, etc. from the target image. The authors use a variation of the feature matching loss for exactly this reason. The idea of the feature matching loss originated in Pix2PixHD, which used the L1 norm between the discriminator features extracted at multiple layers from the ground truth and the generated images. Since there is no ground truth in the face-swapping task, the authors compare the generated image against the target image instead, and only use the last few layers of the discriminator for the loss, since that is where most of the attribute information is contained. The overall objective is composed of identity, adversarial, weak feature matching, and reconstruction losses.
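The difference between the full and the weak feature matching loss can be sketched in a few lines. This is an illustration under assumptions, not the paper's code: the discriminator activations are random toy arrays, the layer count and the cut-off `start=2` are arbitrary, and comparing the result against the target image is my reading of the paper.

```python
import numpy as np

def feature_matching_loss(feats_x, feats_y, start=0):
    """Sum of per-layer mean L1 distances between two lists of
    discriminator feature maps, using layers from index `start` onward."""
    return float(sum(np.abs(x - y).mean()
                     for x, y in zip(feats_x[start:], feats_y[start:])))

# Toy stand-ins for discriminator activations at 4 layers (shallow to deep).
rng = np.random.default_rng(0)
shapes = [(8, 32, 32), (16, 16, 16), (32, 8, 8), (64, 4, 4)]
feats_target = [rng.normal(size=s) for s in shapes]

# Pretend the swapped result differs from the target only in shallow layers.
feats_result = [f + 0.5 if i < 2 else f.copy()
                for i, f in enumerate(feats_target)]

full_loss = feature_matching_loss(feats_result, feats_target)           # all layers
weak_loss = feature_matching_loss(feats_result, feats_target, start=2)  # deep layers only
```

In this toy setup the full loss penalizes the shallow-layer differences, while the weak variant ignores them and only asks the deep features to agree with the target.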

📈 Experiment insights / Key takeaways:

- The model was trained on images of size 224x224

- The qualitative results in the paper blow the baselines out of the water

🖼️ Paper Poster:

✏️My Notes:

- (4/5) for the name, I guess SimSim was already taken, and this was the next best thing

- IMHO the teaser image is quite poorly chosen, I can barely tell the difference between the target and result images

- Surprisingly there is no mention of training the model at higher resolutions such as 512x512 or 1024x1024

- There are examples of video face-swapping that look really neat in the code repository (and a little bit in the appendix); however, they are not discussed in the paper.

- Have you dabbled with DeepFakes before? Let me know in the comments!

🔗 Links:

SimSwap arxiv / SimSwap github

👋 Thanks for reading!

If you found this paper digest useful, subscribe and share the post with your friends and colleagues to support Casual GAN Papers!

Join the Casual GAN Papers telegram channel to stay up to date with new AI Papers!

Join Patreon for Exclusive Perks!

By: @casual_gan

P.S. Send me paper suggestions for future posts @KirillDemochkin!