30: GFP-GAN Explained

Towards Real-World Blind Face Restoration with Generative Facial Prior by Xintao Wang et al. explained in 5 minutes.

⭐️Paper difficulty: 🌕🌕🌑🌑🌑

🎯 At a glance:

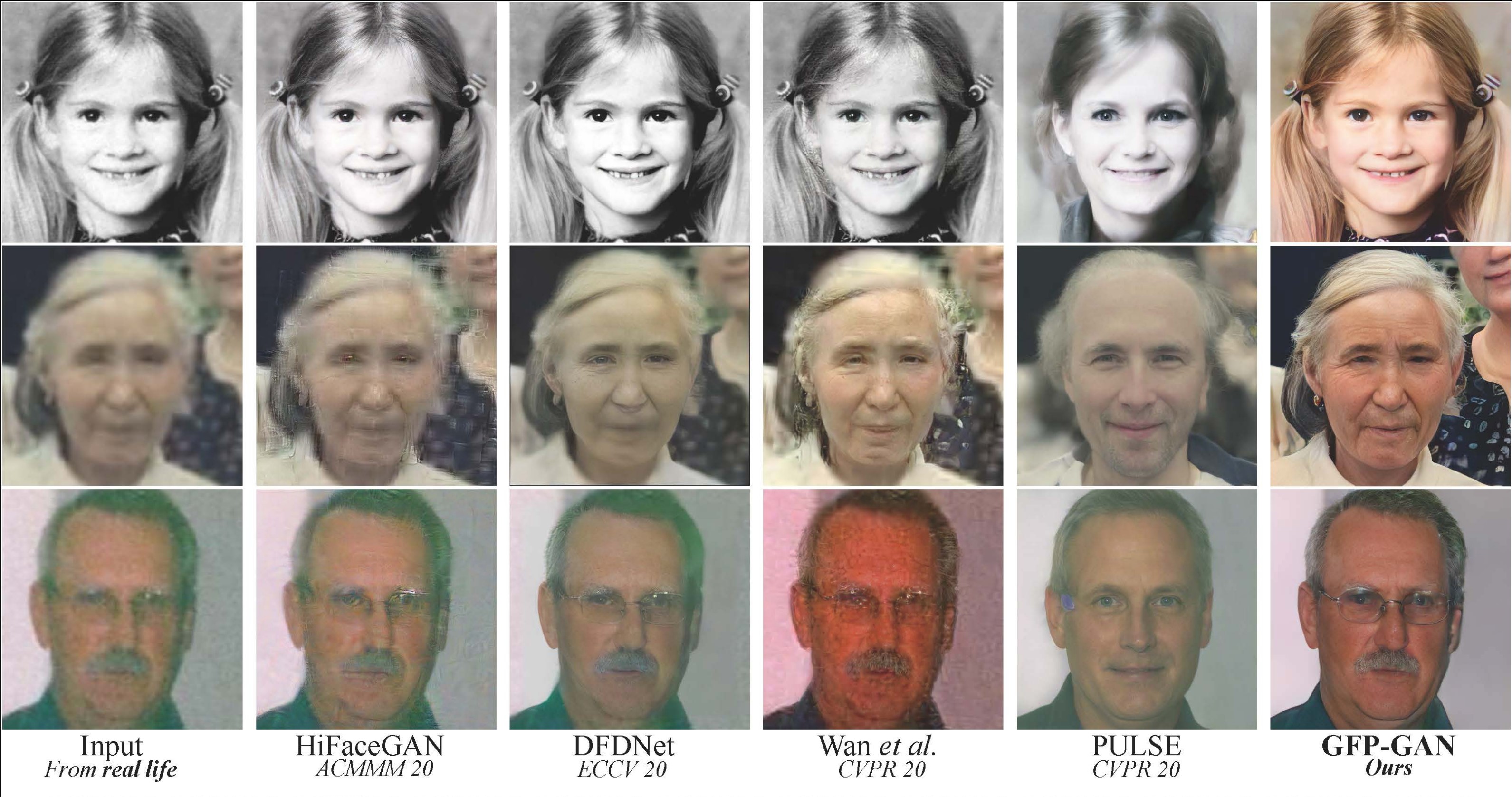

Have you ever tried restoring old photos? It is a long, tedious process since the degradation artifacts are complex, and the poses and expressions are diverse. Luckily, the authors from Tencent ARC came up with GFP-GAN: a new method for real-world blind face restoration that leverages a pretrained GAN and spatial feature transform to restore facial details in a single forward pass.

⌛️ Prerequisites:

(Highly recommended reading to understand the core ideas in this paper):

🔍 Main Ideas:

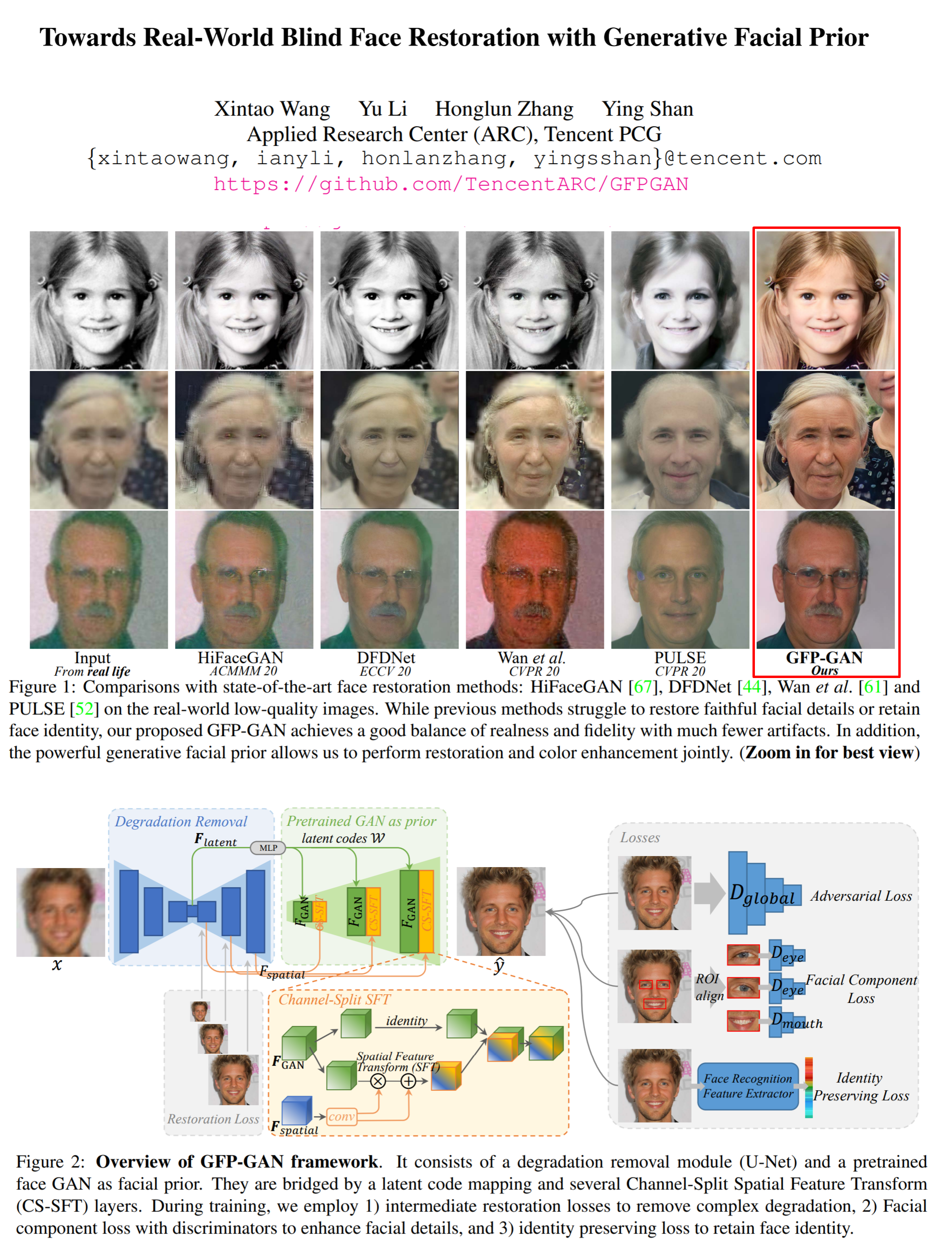

1) Architecture overview: The proposed framework starts with a U-Net degradation removal module that aims to remove degradations (duh) and extract two kinds of features: latent features that map the image to the closest StyleGAN2 latent code, and a set of multi-resolution spatial features that modulate the intermediate feature maps of StyleGAN2. The predicted features are then used in a coarse-to-fine manner to synthesize the restored image.

2) Degradation removal module: A vanilla U-Net, except that the authors apply an L1 loss at every resolution scale: the U-Net outputs a restored image at each scale, and each one is compared with the matching level of the ground-truth image pyramid. The latent features are taken from the bottleneck layer, and the spatial features are obtained from the decoder part of the U-Net.
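To make the multi-scale L1 idea above concrete, here is a minimal NumPy sketch. It is not the authors' code: the 2x2 average-pool downsampling, the number of scales, and the function names (`downsample2x`, `build_pyramid`, `pyramid_l1_loss`) are all assumptions chosen for illustration, and the outputs are assumed to be ordered from full resolution down.

```python
import numpy as np

def downsample2x(img):
    """Halve H and W of a (C, H, W) image by averaging each 2x2 block.
    Assumption: a simple stand-in for whatever resize the real pipeline uses."""
    c, h, w = img.shape
    return img.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def build_pyramid(img, num_scales):
    """Return [full, 1/2, 1/4, ...] resolution versions of the image."""
    pyramid = [img]
    for _ in range(num_scales - 1):
        pyramid.append(downsample2x(pyramid[-1]))
    return pyramid

def pyramid_l1_loss(outputs, ground_truth):
    """Sum of mean absolute errors between each per-scale output and the
    matching ground-truth pyramid level (outputs ordered full-res first)."""
    pyramid = build_pyramid(ground_truth, len(outputs))
    return sum(float(np.abs(out - level).mean())
               for out, level in zip(outputs, pyramid))

# Hypothetical usage: three scales of a random 3x64x64 "image".
gt = np.random.rand(3, 64, 64)
noisy_outputs = [level + 0.1 for level in build_pyramid(gt, 3)]
loss = pyramid_l1_loss(noisy_outputs, gt)
```

Supervising every scale, rather than only the final output, gives the intermediate features a direct restoration signal.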

3) Generative Facial Prior: The latent features from the bottleneck layer of the U-Net are transformed by several MLPs (one per generator layer) into style vectors. The generator does not use the style vectors to output the RGB image right away. Instead, it produces intermediate convolutional features that can be further modulated by the spatial features.
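The latent-to-style mapping described above can be sketched roughly as follows. This is a toy illustration under stated assumptions, not the paper's implementation: the two-layer ReLU MLP, the dimensions, the random weights, and the helper names (`make_mlp`, `apply_mlp`, `latent_to_styles`) are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_mlp(in_dim, hidden_dim, out_dim):
    """Random weights for a hypothetical two-layer MLP (illustration only)."""
    return {
        "w1": rng.normal(0.0, 0.02, (in_dim, hidden_dim)),
        "b1": np.zeros(hidden_dim),
        "w2": rng.normal(0.0, 0.02, (hidden_dim, out_dim)),
        "b2": np.zeros(out_dim),
    }

def apply_mlp(mlp, x):
    """Linear -> ReLU -> Linear."""
    hidden = np.maximum(x @ mlp["w1"] + mlp["b1"], 0.0)
    return hidden @ mlp["w2"] + mlp["b2"]

def latent_to_styles(latent, mlps):
    """Map one bottleneck latent vector to one style vector per generator layer."""
    return np.stack([apply_mlp(mlp, latent) for mlp in mlps])

# Hypothetical sizes: a 32-dim latent, 4 generator layers, 16-dim styles.
latent = rng.normal(size=32)
mlps = [make_mlp(32, 64, 16) for _ in range(4)]
styles = latent_to_styles(latent, mlps)  # one row per generator layer
```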

4) Channel-Split Feature Transform: The spatial features are used to predict affine transform parameters (scale and shift) that modulate the feature maps in the generator (similar in spirit to AdaIN in the first StyleGAN, except that the parameters vary across spatial locations). Interestingly, the modulation is performed only on some of the channels, letting the rest of the features pass through unchanged.
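Here is a minimal NumPy sketch of the channel-split modulation described above. The half-and-half split and the function name `cs_sft` are assumptions for illustration. In the real model the `scale` and `shift` maps would be predicted from the spatial features by small conv layers, whereas here they are simply passed in.

```python
import numpy as np

def cs_sft(gan_features, scale, shift):
    """Channel-split spatial feature transform on a (C, H, W) feature map.

    The first half of the channels passes through untouched; the second half
    is scaled and shifted element-wise, so every spatial location can get
    its own modulation. `scale` and `shift` have shape (C - C // 2, H, W).
    """
    split = gan_features.shape[0] // 2  # assumption: an even 50/50 split
    identity_part = gan_features[:split]
    modulated_part = scale * gan_features[split:] + shift
    return np.concatenate([identity_part, modulated_part], axis=0)

# Hypothetical usage on an 8-channel 4x4 feature map.
features = np.random.rand(8, 4, 4)
scale = np.random.rand(4, 4, 4)
shift = np.random.rand(4, 4, 4)
out = cs_sft(features, scale, shift)  # same shape as `features`
```

The untouched channels keep the generator's own prior intact, while the modulated channels pull the output toward the input image.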

5) Model Objectives: The total loss combines an L1 and perceptual reconstruction loss, an adversarial loss, an identity-preserving loss (computed on ArcFace features), and a facial component loss, which comes from several small local discriminators trained on patches of the eyes, mouth, etc.
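Written out, the objective above is a weighted sum of the individual terms. The weights $\lambda$ below are placeholder symbols rather than values taken from this digest, and the subscript names are my own shorthand:

```latex
\mathcal{L}_{total} =
    \lambda_{l1}\,\mathcal{L}_{l1}
  + \lambda_{per}\,\mathcal{L}_{per}
  + \lambda_{adv}\,\mathcal{L}_{adv}
  + \lambda_{comp}\,\mathcal{L}_{comp}
  + \lambda_{id}\,\mathcal{L}_{id}
```

Here $\mathcal{L}_{l1}$ and $\mathcal{L}_{per}$ are the reconstruction terms, $\mathcal{L}_{adv}$ is the adversarial loss, $\mathcal{L}_{comp}$ is the facial component loss from the local discriminators, and $\mathcal{L}_{id}$ is the ArcFace-based identity loss.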

📈 Experiment insights / Key takeaways:

- All images are 512×512

- The samples are on the next level compared to the baselines (no, seriously, check out the attached image)

- They can restore images with closed eyes! (this is surprisingly non-trivial, I tried 😂)

- Only synthetic data is used during training, hence some artifacts appear on real images whose degradations are unlike the synthetic ones

✏️My Notes:

- (3/5) for the model name; nothing special 🤷‍♂️

- Sucks that the local component loss limits this approach to just the facial domain 😕

- I do not know how much the samples are curated, but dang, they just destroy all competition! 🤯

- Another paper in the “Spatial Features” trend. This time on a pretrained StyleGAN2, which is even more interesting.

🔗 Links:

GFP-GAN arXiv / GFP-GAN GitHub

👋 Thanks for reading!

If you found this paper digest useful, subscribe and share the post with your friends and colleagues to support Casual GAN Papers!

By: @casual_gan

P.S. Send me paper suggestions for future posts @KirillDemochkin!