33: GRAF Explained

GRAF: Generative Radiance Fields for 3D-Aware Image Synthesis by Katja Schwarz et al. explained in 5 minutes.

⭐️Paper difficulty: 🌕🌕🌕🌑🌑

🎯 At a glance:

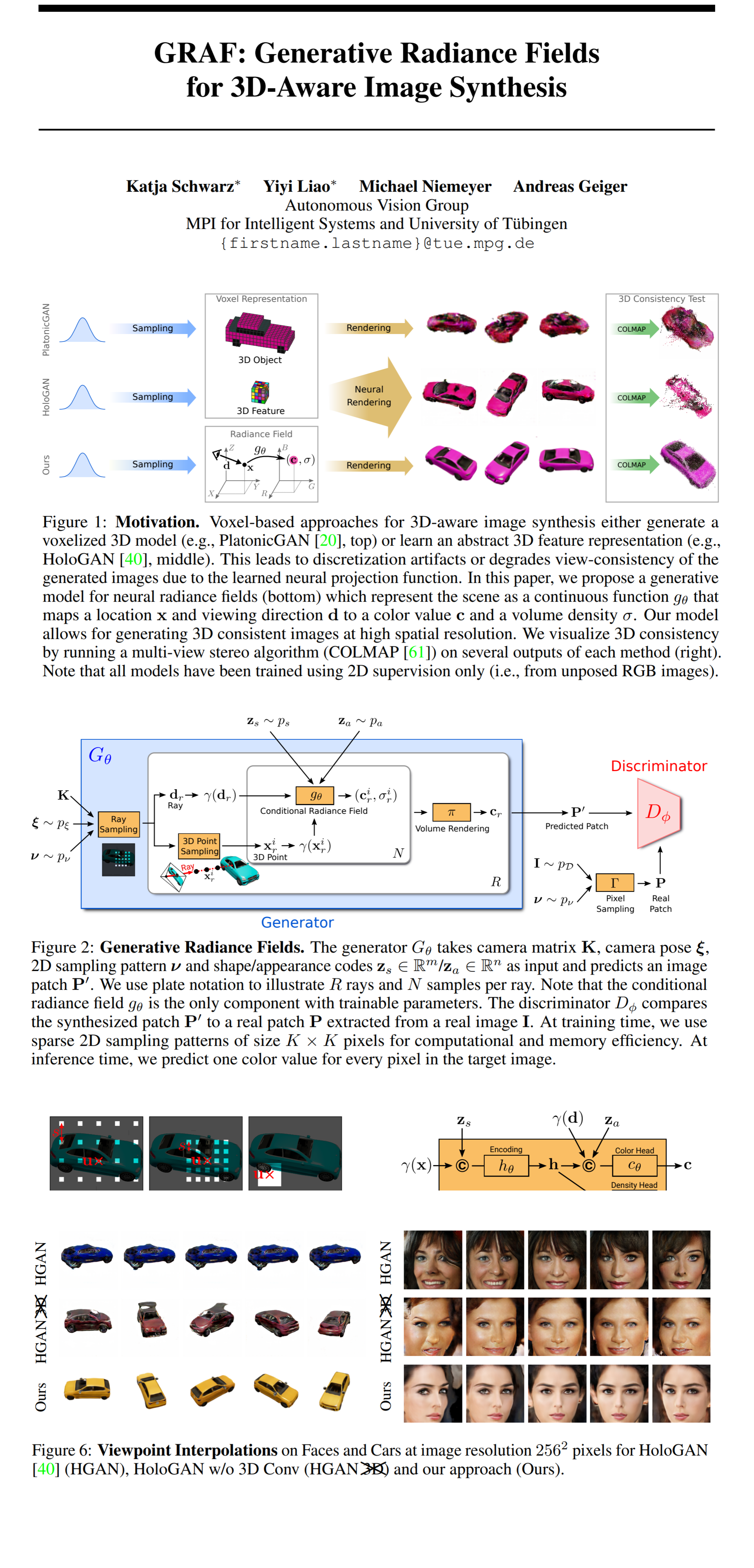

NeRF models blew up last year, spawning an endless stream of variations and modifications that address important issues with the original design. One of the more unique ideas to come out of this NeRF Explosion (a term coined by Frank Dellaert) is this paper by researchers from the Max Planck Institute for Intelligent Systems. The authors of GRAF combine NeRFs and GANs into a pipeline for conditional Neural Radiance Fields that produce consistent 3D models with various shapes and appearances, despite being trained only on unposed 2D images.

⌛️ Prerequisites:

(Highly recommended reading to understand the core contributions of this paper):

🔍 Main Ideas:

1) Motivation:

Voxel-based 3D generators suffer from discretization artifacts, whereas implicit rendering methods operate on continuous representations and therefore generate more consistent 3D shapes. NeRFs are traditionally fit one per scene, which is very computationally expensive. GRAF, on the other hand, learns to generate a whole class of diverse objects by conditioning the underlying NeRF on two latent vectors that represent shape and appearance.

2) Generative Radiance Field:

The generator takes the camera matrix (intrinsics), a camera pose, a sampling pattern (which determines the location and scale of the image patch), and the shape and appearance codes as input. It predicts an image patch rather than a full image, because rendering the entire image is too expensive during training. Camera poses are sampled uniformly from the upper hemisphere around the object. The rays in a patch are sampled according to a pattern defined by the patch center and the distance between adjacent rays, which enables the use of a convolutional discriminator that does not depend on the image resolution.
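To make the sampling step concrete, here is a minimal NumPy sketch of a patch pattern (a K x K grid defined by a center and a spacing between adjacent rays) and of a uniform camera position on the upper hemisphere. The function names, the scale range, and the choice of z as the "up" axis are assumptions made for illustration, not the authors' implementation.

```python
import numpy as np

def sample_patch_coords(center, scale, patch_size=32):
    """Pixel coordinates of a K x K grid centered at `center`,
    with `scale` pixels between adjacent rays. Shape: (K, K, 2) as (x, y)."""
    offsets = (np.arange(patch_size) - (patch_size - 1) / 2.0) * scale
    xs, ys = np.meshgrid(offsets + center[0], offsets + center[1], indexing="xy")
    return np.stack([xs, ys], axis=-1)

def random_patch(image_size, patch_size=32, rng=None):
    """Draw a random scale and center so the patch stays inside the image.
    The scale range used here is an assumption of this sketch."""
    rng = np.random.default_rng() if rng is None else rng
    max_scale = (image_size - 1) / (patch_size - 1)  # patch spans the full image
    scale = rng.uniform(1.0, max_scale)
    half = scale * (patch_size - 1) / 2.0            # half-width of the patch in pixels
    center = rng.uniform(half, image_size - 1 - half, size=2)
    return sample_patch_coords(center, scale, patch_size), center, scale

def sample_camera_position(radius, rng):
    """Uniform point on the upper hemisphere (z >= 0, z assumed to be 'up').
    Sampling the height uniformly gives a uniform distribution over the sphere's area."""
    z = rng.uniform(0.0, 1.0)
    phi = rng.uniform(0.0, 2.0 * np.pi)
    r_xy = np.sqrt(1.0 - z * z)
    return radius * np.array([r_xy * np.cos(phi), r_xy * np.sin(phi), z])
```

A small scale gives a patch that covers fine local detail, while the largest scale spreads the same K x K rays over the whole image, so a fixed-size patch can cover both.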

For each point along a sampled ray, the generator uses an MLP to first predict a shape encoding from the positional encoding and the shape code, and then transforms it into a volume density value. This value is computed independently of the viewpoint and the appearance code to encourage multi-view consistency and to disentangle shape from appearance.

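Color is predicted by a second MLP head from the shape encoding, the positional encoding of the viewing direction, and the appearance code. As in NeRF, the densities and colors of the points along each ray are then composited by volume rendering to produce the pixel colors of the patch.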
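The two-headed structure described above can be sketched as a toy NumPy forward pass with random weights. The class name, layer sizes, and frequency counts are hypothetical, and feeding the color head an encoding of the viewing direction is this sketch's reading of "positional encoding". The point is only to show which inputs reach which output.

```python
import numpy as np

def positional_encoding(x, num_freqs):
    """NeRF-style encoding: sin/cos of each coordinate at frequencies 2^k * pi."""
    freqs = (2.0 ** np.arange(num_freqs)) * np.pi
    angles = x[..., None] * freqs                       # (..., dim, num_freqs)
    enc = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)
    return enc.reshape(*x.shape[:-1], -1)               # (..., dim * 2 * num_freqs)

class TinyConditionalField:
    """Toy stand-in for a conditional radiance field (one layer per head)."""

    def __init__(self, z_dim=8, hidden=32, pos_freqs=4, dir_freqs=2, seed=0):
        rng = np.random.default_rng(seed)
        self.pos_freqs, self.dir_freqs = pos_freqs, dir_freqs
        pos_dim, dir_dim = 3 * 2 * pos_freqs, 3 * 2 * dir_freqs
        self.w_shape = rng.normal(0.0, 0.3, (pos_dim + z_dim, hidden))
        self.w_sigma = rng.normal(0.0, 0.3, (hidden, 1))
        self.w_color = rng.normal(0.0, 0.3, (hidden + dir_dim + z_dim, 3))

    def __call__(self, points, view_dirs, z_shape, z_app):
        n = points.shape[0]
        zs = np.broadcast_to(z_shape, (n, z_shape.shape[-1]))
        za = np.broadcast_to(z_app, (n, z_app.shape[-1]))
        # Shape encoding: depends only on the 3D position and the shape code.
        shape_in = np.concatenate([positional_encoding(points, self.pos_freqs), zs], axis=-1)
        h = np.maximum(0.0, shape_in @ self.w_shape)
        # Density head: never sees the view direction or the appearance code.
        sigma = np.maximum(0.0, h @ self.w_sigma)[:, 0]
        # Color head: shape encoding + encoded view direction + appearance code.
        color_in = np.concatenate(
            [h, positional_encoding(view_dirs, self.dir_freqs), za], axis=-1)
        rgb = 1.0 / (1.0 + np.exp(-(color_in @ self.w_color)))
        return sigma, rgb
```

Because the density head only receives the shape encoding, swapping the appearance code changes the colors but leaves the geometry untouched, which is the disentanglement the paragraph describes.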

The discriminator is borrowed from PatchGAN, with the difference that it is fed patches sampled at various scales.
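For the discriminator to compare like with like, real patches have to be read from training images with the same kind of center-and-spacing pattern as the generated ones. Below is a hedged sketch of that step, assuming bilinear interpolation at the non-integer grid positions. `patch_grid` and `bilinear_sample` are hypothetical helpers written for this post.

```python
import numpy as np

def patch_grid(center, scale, patch_size):
    """(K, K, 2) grid of (x, y) pixel positions around `center`, spaced by `scale`."""
    offsets = (np.arange(patch_size) - (patch_size - 1) / 2.0) * scale
    xs, ys = np.meshgrid(offsets + center[0], offsets + center[1], indexing="xy")
    return np.stack([xs, ys], axis=-1)

def bilinear_sample(image, coords):
    """Read `image` (H, W, C) at continuous (x, y) positions `coords` (..., 2)."""
    h, w = image.shape[:2]
    x = np.clip(coords[..., 0], 0, w - 1)
    y = np.clip(coords[..., 1], 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = (x - x0)[..., None]   # horizontal blend weight
    wy = (y - y0)[..., None]   # vertical blend weight
    top = image[y0, x0] * (1 - wx) + image[y0, x1] * wx
    bottom = image[y1, x0] * (1 - wx) + image[y1, x1] * wx
    return top * (1 - wy) + bottom * wy

# Usage: a real 64x64 RGB image turned into a 16x16 patch at spacing 2.5.
real_image = np.random.default_rng(0).uniform(size=(64, 64, 3))
real_patch = bilinear_sample(real_image, patch_grid((32.0, 32.0), 2.5, 16))
```

Whatever the spacing, the patch that reaches the discriminator has a fixed K x K size, so one convolutional network can judge both close-up detail and the global layout.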

📈 Experiment insights / Key takeaways:

- 3 synthetic and 3 real-world datasets are considered

- The baselines are PlatonicGAN and HoloGAN

- GRAF's FID is significantly better than that of both baselines

- It is harder for HoloGAN to accurately capture the appearance of objects from different viewpoints due to its low-dimensional 3D feature representation and its learnable projection

- Unlike GRAF, voxel-based models do not scale well to high resolutions

- Learned projections should generally be avoided

- Sampling patches at random scales is crucial for robust performance

🖼️ Paper Poster:

✏️My Notes:

- (4/5) This sets up one of the funniest paper names (wait for the next post)

- I still cannot wrap my mind around the fact that the generator learns to generate diverse, coherent 3D shapes without ever seeing any 3D objects during training.

- This is an important paper to cover before going for its direct follow-up that won CVPR best paper

- The shapes learned are fairly simple, but wait for the follow-up paper!

🔗 Links:

👋 Thanks for reading!

By: @casual_gan

P.S. Send me paper suggestions for future posts @KirillDemochkin!