36: SimCLR Explained

A Simple Framework for Contrastive Learning of Visual Representations by Ting Chen et al. explained in 5 minutes or less.

⭐️Paper difficulty: 🌕🌑🌑🌑🌑

This paper is very easy to understand!

🎯 At a glance:

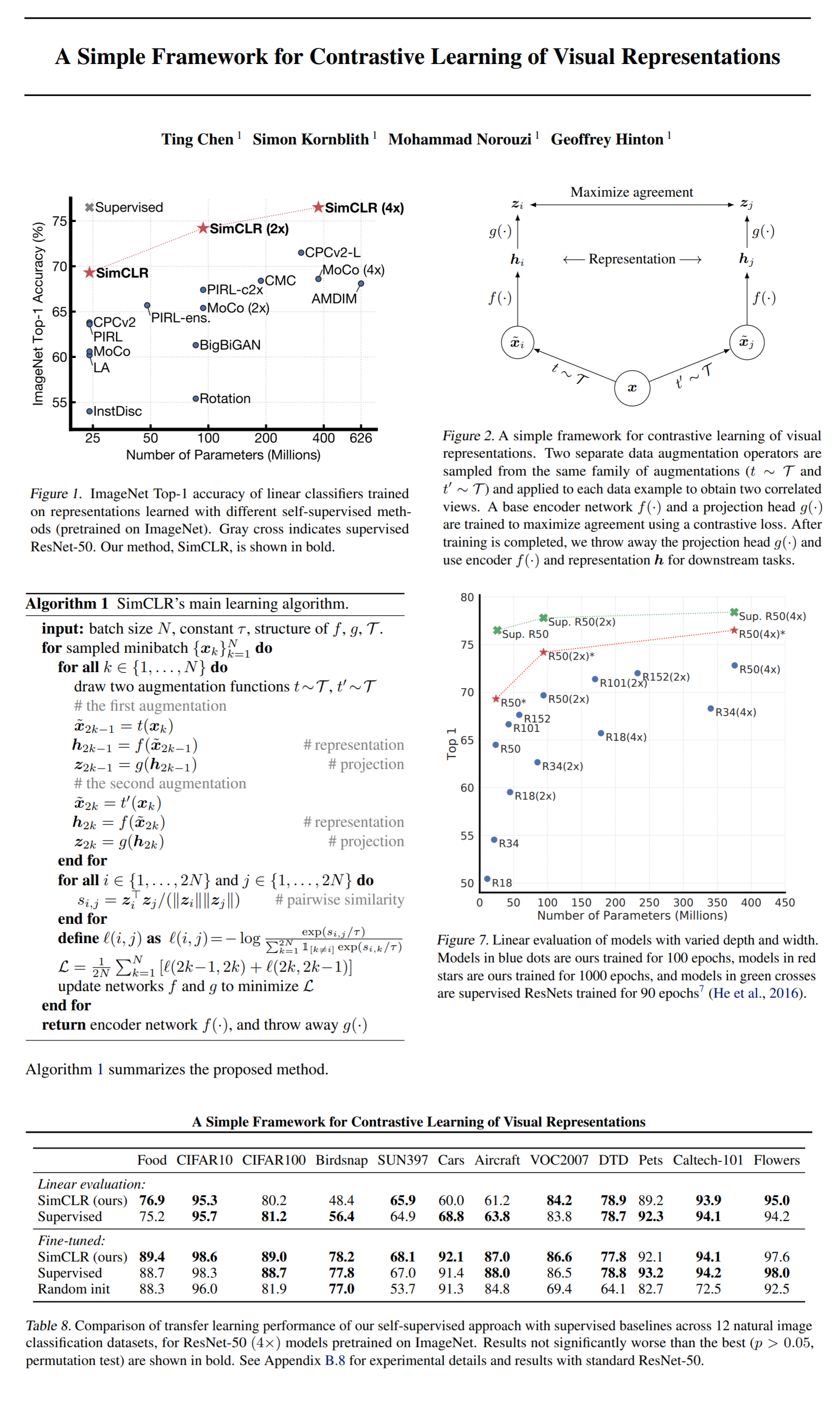

Is it possible to learn good image representations for many downstream tasks at once? The authors of SimCLR propose a simple framework for contrastive self-supervised learning that learns useful representations. They report several crucial findings: 1) The composition of data augmentations plays a critical role in learning effective representations. 2) Introducing a learnable nonlinearity between the representations and the contrastive loss substantially improves the quality of the learned representations. 3) Contrastive learning benefits from larger batch sizes and more training steps compared to supervised learning. The proposed approach outperforms previous self-supervised approaches, and a linear classifier trained on its representations matches the performance of a supervised ResNet-50 on ImageNet.

⌛️ Prerequisites:

(Highly recommended reading to understand the core contributions of this paper):

This is the first post in a series about self-supervised learning. Subscribe to get notified when the posts come out!

🔍 Main Ideas:

0) The Contrastive Learning Framework:

The main idea of SimCLR is to learn representations that maximize agreement between differently augmented views of the same image via a contrastive loss. SimCLR comprises four major components (1-4 below), plus a scheme for sampling negative pairs (5):

1) Stochastic data augmentation:

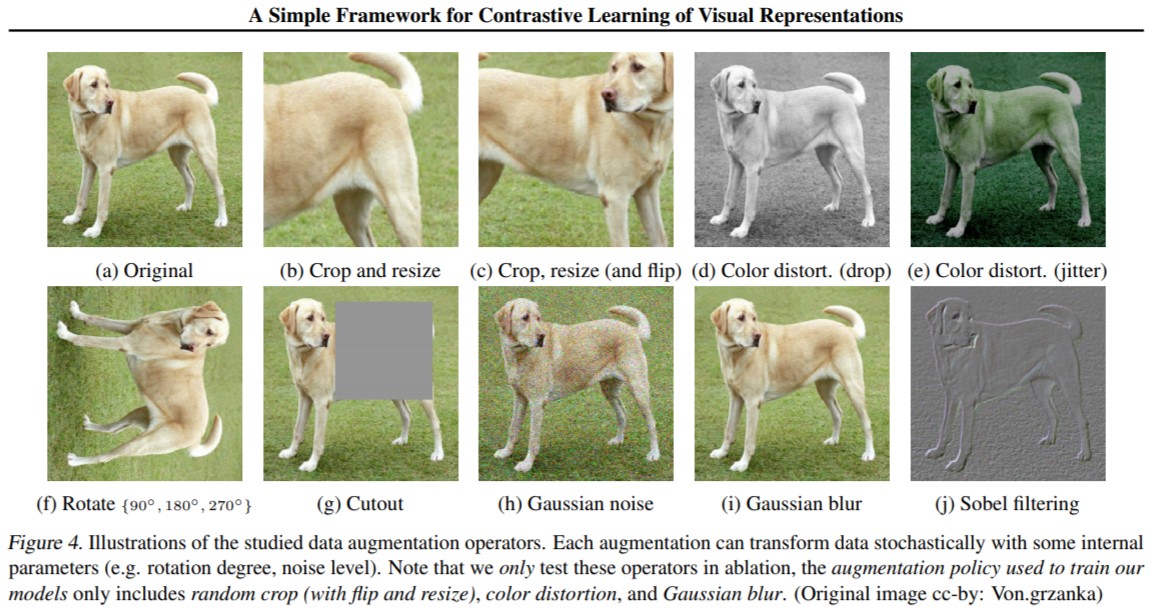

Three augmentations are applied sequentially to an image to generate two correlated views that are considered a positive pair: random cropping with resize to the original size, random color distortions, and random Gaussian blur.
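To make the three-step pipeline concrete, here is a minimal toy sketch in pure Python. It is not the authors' implementation: images are assumed to be nested lists of RGB tuples with values in [0, 1], the color distortion is a simplified stand-in (brightness scaling plus random grayscale), the blur uses a fixed 3x3 kernel, and all function names and parameter values (`min_scale`, `strength`, `gray_prob`, the 0.5 blur probability) are illustrative choices.

```python
import random

def random_resized_crop(img, rng, min_scale=0.5):
    """Crop a random region, then resize it back to the original size (nearest neighbour)."""
    h, w = len(img), len(img[0])
    ch = rng.randint(max(1, int(h * min_scale)), h)  # crop height
    cw = rng.randint(max(1, int(w * min_scale)), w)  # crop width
    top, left = rng.randint(0, h - ch), rng.randint(0, w - cw)
    return [[img[top + (y * ch) // h][left + (x * cw) // w] for x in range(w)]
            for y in range(h)]

def color_distort(img, rng, strength=0.5, gray_prob=0.2):
    """Simplified color distortion: random brightness scaling and random grayscale."""
    factor = 1.0 + rng.uniform(-strength, strength)
    to_gray = rng.random() < gray_prob
    out = []
    for row in img:
        new_row = []
        for r, g, b in row:
            if to_gray:
                r = g = b = 0.299 * r + 0.587 * g + 0.114 * b
            new_row.append(tuple(min(1.0, max(0.0, c * factor)) for c in (r, g, b)))
        out.append(new_row)
    return out

def blur3x3(img):
    """Blur with a fixed 3x3 binomial kernel, clamping coordinates at the borders."""
    h, w = len(img), len(img[0])
    k = [1, 2, 1]
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            acc = [0.0, 0.0, 0.0]
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    weight = k[dy + 1] * k[dx + 1] / 16.0
                    for c in range(3):
                        acc[c] += weight * img[yy][xx][c]
            row.append(tuple(acc))
        out.append(row)
    return out

def augment(img, rng):
    """Apply crop, color distortion, and (half of the time) blur, in that order."""
    view = random_resized_crop(img, rng)
    view = color_distort(view, rng)
    if rng.random() < 0.5:
        view = blur3x3(view)
    return view

def positive_pair(img, rng):
    """Two independently augmented views of the same image form a positive pair."""
    return augment(img, rng), augment(img, rng)
```

Calling `positive_pair(img, random.Random(0))` returns two views with the same height and width as `img`. Because each call to `augment` draws fresh random parameters, the two views generally differ even though they come from the same image.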

2) Base encoder:

A ResNet that extracts representation vectors from augmented data samples. The output is taken after the average pooling layer.

3) A small neural network projection head:

An MLP with one hidden layer with a ReLU nonlinearity maps representations to the contrastive loss space.
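As a rough illustration, the projection head can be written as z = W2 · ReLU(W1 · h). The sketch below uses pure Python with toy dimensions; the helper names, the random initialization, and the omission of bias terms are assumptions made for brevity, not details taken from this post.

```python
import random

def relu(v):
    """Element-wise ReLU."""
    return [max(0.0, x) for x in v]

def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def init_linear(rng, n_out, n_in):
    """Random weight matrix of shape (n_out, n_in); the scale is an illustrative choice."""
    scale = (1.0 / n_in) ** 0.5
    return [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]

def projection_head(h, W1, W2):
    """One hidden layer with ReLU: z = W2 * relu(W1 * h)."""
    return matvec(W2, relu(matvec(W1, h)))

rng = random.Random(0)
W1 = init_linear(rng, 8, 8)   # hidden layer (toy size)
W2 = init_linear(rng, 4, 8)   # output layer (toy size)
h = [rng.random() for _ in range(8)]  # stand-in for an encoder output
z = projection_head(h, W1, W2)        # vector that would be fed to the contrastive loss
```

The contrastive loss is computed on `z`, while `h` (the encoder output) is the representation kept for downstream tasks.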

4) A contrastive loss function:

Given a set of representations and a target view, the contrastive prediction task aims to identify the positive pair for the target view among the input set of augmented views. The loss is the normalized temperature-scaled cross-entropy loss (NT-Xent), with cosine similarities between representations as scores.
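Here is a small pure-Python sketch of that loss. For each view i with positive j, it computes -log( exp(sim(i, j) / t) / sum over k != i of exp(sim(i, k) / t) ), then averages over all views. The layout assumption (entries `z[2k]` and `z[2k + 1]` are the two views of image k) and the default temperature of 0.5 are illustrative choices, not values taken from this post.

```python
import math

def cosine_sim(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def nt_xent(z, temperature=0.5):
    """NT-Xent loss over 2N projections, where z[2k] and z[2k + 1] are views of image k."""
    n = len(z)
    total = 0.0
    for i in range(n):
        j = i + 1 if i % 2 == 0 else i - 1  # index of the positive for view i
        # Scores against every other view: the positive and all negatives.
        logits = [cosine_sim(z[i], z[k]) / temperature for k in range(n) if k != i]
        positive = cosine_sim(z[i], z[j]) / temperature
        # Numerically stable log-sum-exp for the denominator.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denominator - positive
    return total / n

# Positives that point the same way give a lower loss than mismatched ones.
aligned = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
mixed = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]
print(nt_xent(aligned) < nt_xent(mixed))  # prints True
```

Lowering the temperature sharpens the softmax, so negatives that look similar to the target view are penalized more heavily.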

5) Negative pair sampling:

A minibatch of N images is sampled, and the two augmented views of each image form a positive pair. The other 2(N - 1) augmented views in the batch are treated as negatives. The batch size is very large, ranging from 256 to 8192 (at 8192, that is 16382 negative examples per positive pair). The LARS optimizer is used to keep training stable at such large batch sizes.
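The count of negatives follows directly from the batch construction: N images give 2N views, and each target view excludes itself and its positive. A tiny sketch of the arithmetic (the function name is just for illustration):

```python
def negatives_per_anchor(batch_size):
    """2N views in total, minus the anchor itself and its one positive."""
    return 2 * batch_size - 2

for n in (256, 8192):
    print(n, negatives_per_anchor(n))
# prints:
# 256 510
# 8192 16382
```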

📈 Experiment insights / Key takeaways:

- No single augmentation is enough to learn good representations

- SimCLR benefits from stronger color augmentation than supervised learning does

- Surprise! (Not really.) Deeper and wider variants of SimCLR perform better

- For semi-supervised evaluation, 1% or 10% of the ImageNet labels are used to fine-tune the entire network without regularization

- Fine-tuning on the entire ImageNet is better than training from scratch

🖼️ Paper Poster:

✏️My Notes:

- (3/5) kind of an average model name, but at least it makes sense

- Wow, my first self-supervised contrastive paper on this channel! Turns out the ideas are pretty straightforward and the paper is very well written

- I am going to cover 2-3 more papers on the topic of self-supervision in the next couple of posts. Stay tuned!

- I omitted the appendix; go check it out for more details on additional experiments, augmentation choices, etc.

🔗 Links:

👋 Thanks for reading!

If you found this paper digest useful, subscribe and share the post with your friends and colleagues to support Casual GAN Papers!

Join the Casual GAN Papers telegram channel to stay up to date with new AI Papers!

Join Patreon for Exclusive Perks!

By: @casual_gan

P.S. Send me paper suggestions for future posts @KirillDemochkin!