32: PTI Explained

Pivotal Tuning for Latent-based Editing of Real Images by Daniel Roich et al. explained in 5 minutes.

⭐️Paper difficulty: 🌕🌑🌑🌑🌑

🎯 At a glance:

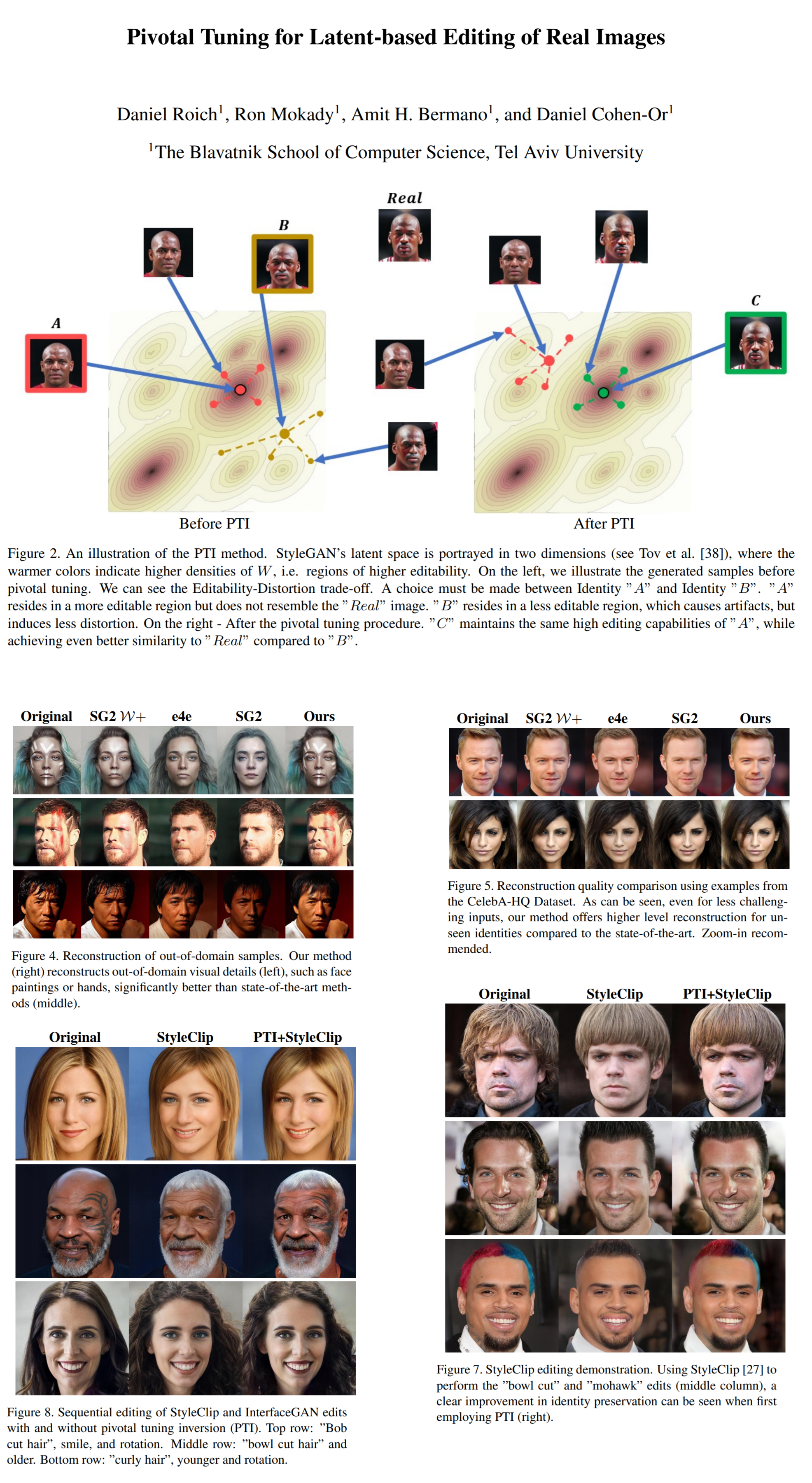

Several new StyleGAN2 inversion techniques have been proposed recently, but they all suffer from an inherent editability/reconstruction tradeoff: reconstructions with near-perfect identity preservation fall outside of the generator’s well-defined latent space, which hinders editing. Conversely, reconstructions that are well suited for edits tend to have a significant identity gap with the person in the target photo. Daniel Roich and his colleagues from Tel Aviv University propose a simple yet effective two-step solution: first, fit a latent vector that reconstructs the image reasonably well, then use it as a pivot to fine-tune the generator so that it reconstructs the input image almost perfectly while retaining the editing capabilities of the original latent space.

⌛️ Prerequisites:

(Highly recommended reading to understand the core contributions of this paper):

🔍 Main Ideas:

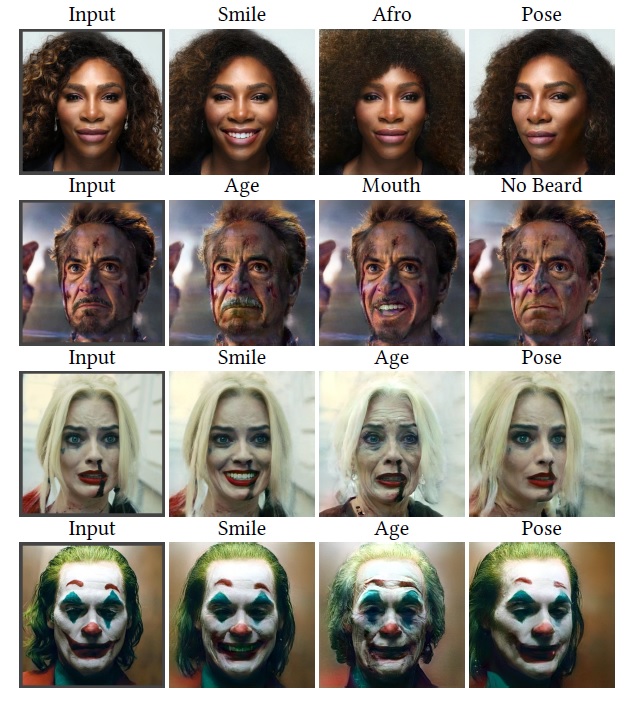

1) Inversion: The authors say it doesn’t really matter how you get the latent code. In most of their experiments they simply use direct latent optimization, fitting the style vector “w” via gradient descent (not “w+”, since they want the best editability). For the StyleCLIP experiments they use an e4e-based vector instead, since that is the type of input that StyleCLIP was trained with. Interestingly, a noise vector is fitted along with the style vector; the authors claim that it helps with the reconstruction process but, due to the noise regularization term, does not play a significant part once a suitable style vector is found. The latent code is optimized with just an LPIPS reconstruction loss and an L1 noise regularization.
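To make step 1 concrete, here is a minimal toy sketch of direct latent optimization. Everything in it is an assumption for illustration: the "generator" is a tiny fixed linear map rather than StyleGAN2, plain squared error stands in for LPIPS, there is no noise vector, and the names `generate`, `invert`, `GENERATOR`, and `TARGET` are hypothetical. The point is only that the generator stays frozen while the latent code moves, and that the best reachable reconstruction can still miss the target.

```python
# Toy stand-in for step 1: optimize a latent code against a FROZEN generator.
# Assumptions: linear "generator", squared error instead of LPIPS, no noise.

def generate(weights, w):
    """Linear toy generator: each output 'pixel' is a dot product with w."""
    return [sum(a * b for a, b in zip(row, w)) for row in weights]

def squared_error(x, y):
    return sum((a - b) ** 2 for a, b in zip(x, y))

def invert(weights, target, steps=500, lr=0.05):
    """Gradient descent on w only; the generator weights never change."""
    w = [0.0] * len(weights[0])
    for _ in range(steps):
        residual = [g - t for g, t in zip(generate(weights, w), target)]
        # d/dw_j of sum_i residual_i^2 = 2 * sum_i residual_i * weights[i][j]
        grad = [2 * sum(r * row[j] for r, row in zip(residual, weights))
                for j in range(len(w))]
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w

GENERATOR = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 2-D latent -> 3 "pixels"
TARGET = [1.0, 2.0, 2.0]                           # not exactly reachable

pivot = invert(GENERATOR, TARGET)
gap = squared_error(generate(GENERATOR, pivot), TARGET)
print("pivot:", pivot, "remaining gap:", gap)
```

The leftover `gap` is the toy analogue of the identity gap: no latent code can close it as long as the generator is frozen.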

2) Pivotal Tuning: The latent code from step one will produce a reconstruction similar to the target image but with significant distortion, also called an identity gap. To bring the reconstruction closer to the target image, the generator is fine-tuned so that the image it produces from the pivot latent code matches the target more closely. At this stage, LPIPS and L2 losses between the two images are used. This step is described as “shooting a dart and then shifting the board itself to compensate for a near hit”.
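A toy sketch of the "shift the board" idea, under loud assumptions: the generator is again a small linear map (not StyleGAN2), squared error replaces the LPIPS + L2 combination, and `PIVOT` is a hypothetical latent code assumed to come from a prior inversion. The roles are flipped relative to step 1: the pivot is held fixed and the generator weights are updated.

```python
# Toy stand-in for step 2: keep the pivot FIXED and fine-tune the generator.
# Assumptions: linear "generator", squared error instead of LPIPS + L2.

def generate(weights, w):
    """Linear toy generator: each output 'pixel' is a dot product with w."""
    return [sum(a * b for a, b in zip(row, w)) for row in weights]

def pivotal_tuning(weights, pivot, target, steps=200, lr=0.05):
    """Gradient descent on the generator weights; the pivot never changes."""
    tuned = [row[:] for row in weights]  # copy so the original stays intact
    for _ in range(steps):
        residual = [g - t for g, t in zip(generate(tuned, pivot), target)]
        # d/dA_ij of sum_i residual_i^2 = 2 * residual_i * pivot_j
        for i, r in enumerate(residual):
            for j, wj in enumerate(pivot):
                tuned[i][j] -= lr * 2 * r * wj
    return tuned

ORIGINAL = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
TARGET = [1.0, 2.0, 2.0]
PIVOT = [2.0 / 3.0, 5.0 / 3.0]  # hypothetical result of a prior inversion

tuned = pivotal_tuning(ORIGINAL, PIVOT, TARGET)
before = generate(ORIGINAL, PIVOT)
after = generate(tuned, PIVOT)
print("before tuning:", before)
print("after tuning: ", after)
```

Without any regularization, nothing in this loop constrains what the tuned generator does for latent codes other than the pivot.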

3) Locality Regularization: A special regularization technique is used during step 2 to ensure that the latent space retains the semantic properties necessary for editing inverted images. At each iteration, a random style code sampled from the mapping network (which remains unchanged) is interpolated with the pivot. This interpolated code is used to generate two images: one with the original StyleGAN weights, and one with the fine-tuned weights. The LPIPS and L2 losses between the two images regularize the generator so that its changes are localized to the region of the latent space around the pivot, keeping the rest mostly intact to allow for semantic editing later on.
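The regularizer described above can be sketched as a standalone loss computation. Assumptions, stated plainly: the generators are toy linear maps, Gaussian samples stand in for codes produced by the mapping network, squared error replaces LPIPS + L2, and the specific interpolation used here (a fixed-length step `alpha` from the pivot toward the sampled code) is one possible reading of "interpolated with the pivot". All function names are hypothetical.

```python
# Toy stand-in for the locality regularization term.
# Assumptions: linear "generators", Gaussian codes instead of mapping-network
# samples, squared error instead of LPIPS + L2, fixed-step interpolation.
import math
import random

def generate(weights, w):
    """Linear toy generator: each output 'pixel' is a dot product with w."""
    return [sum(a * b for a, b in zip(row, w)) for row in weights]

def interpolate_toward(pivot, w_z, alpha):
    """Move a fixed distance alpha from the pivot toward a sampled code."""
    direction = [z - p for z, p in zip(w_z, pivot)]
    norm = math.sqrt(sum(d * d for d in direction))
    return [p + alpha * d / norm for p, d in zip(pivot, direction)]

def locality_loss(original, tuned, pivot, rng, alpha=0.5, samples=8):
    """Average image difference between the two generators near the pivot."""
    total = 0.0
    for _ in range(samples):
        w_z = [rng.gauss(0.0, 1.0) for _ in pivot]   # stand-in random code
        w_r = interpolate_toward(pivot, w_z, alpha)  # interpolated code
        x_orig = generate(original, w_r)             # frozen original weights
        x_tuned = generate(tuned, w_r)               # fine-tuned weights
        total += sum((a - b) ** 2 for a, b in zip(x_orig, x_tuned))
    return total / samples

ORIGINAL = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
TUNED = [[0.9, 0.2], [0.0, 1.0], [1.0, 1.0]]  # hypothetical fine-tuned weights
PIVOT = [2.0 / 3.0, 5.0 / 3.0]

unchanged = locality_loss(ORIGINAL, ORIGINAL, PIVOT, random.Random(0))
drifted = locality_loss(ORIGINAL, TUNED, PIVOT, random.Random(0))
print("loss with identical weights:", unchanged)
print("loss after weights drift:   ", drifted)
```

In a full training loop this term would be added to the step 2 reconstruction loss, so the optimizer is penalized whenever the fine-tuned generator disagrees with the original one on the sampled codes.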

📈 Experiment insights / Key takeaways:

- It is possible to use PTI for multiple images at once

- PTI straight up destroys the baselines in all qualitative comparisons

- Quantitative metrics show that PTI alleviates the distortion-editability trade-off

- The whole procedure takes less than 3 minutes per image on a single 2080 GPU

- The next step would be to develop a mapper that approximates the necessary update to the generator’s weights in the second phase with a single forward pass

✏️My Notes:

- (3/5) for a boring-ass name

- It is insane how bad the e4e samples look here compared to PTI samples, yet in the e4e papers everything looked really good.

- I think the most surprising finding is that the editing directions and mappers from StyleCLIP work out-of-the-box without any additional fine-tuning

👋 Thanks for reading!

By: @casual_gan

P.S. Send me paper suggestions for future posts @KirillDemochkin!