21: GANcraft Explained

GANcraft: Unsupervised 3D Neural Rendering of Minecraft Worlds by Zekun Hao et al. explained in 5 minutes.

⭐️Paper difficulty: 🌕🌕🌕🌑🌑

🎯 At a glance:

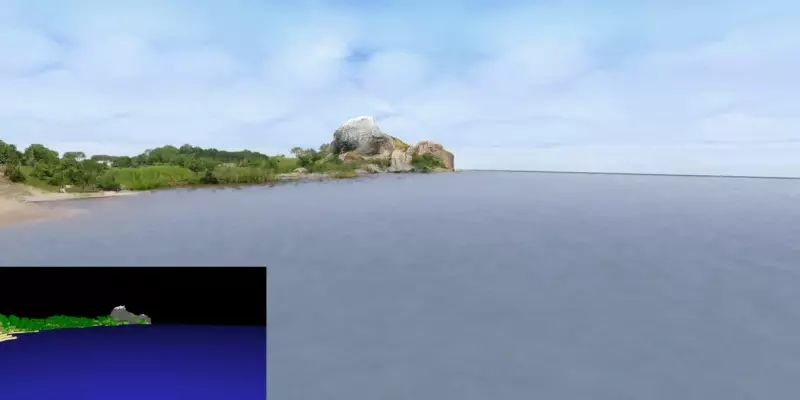

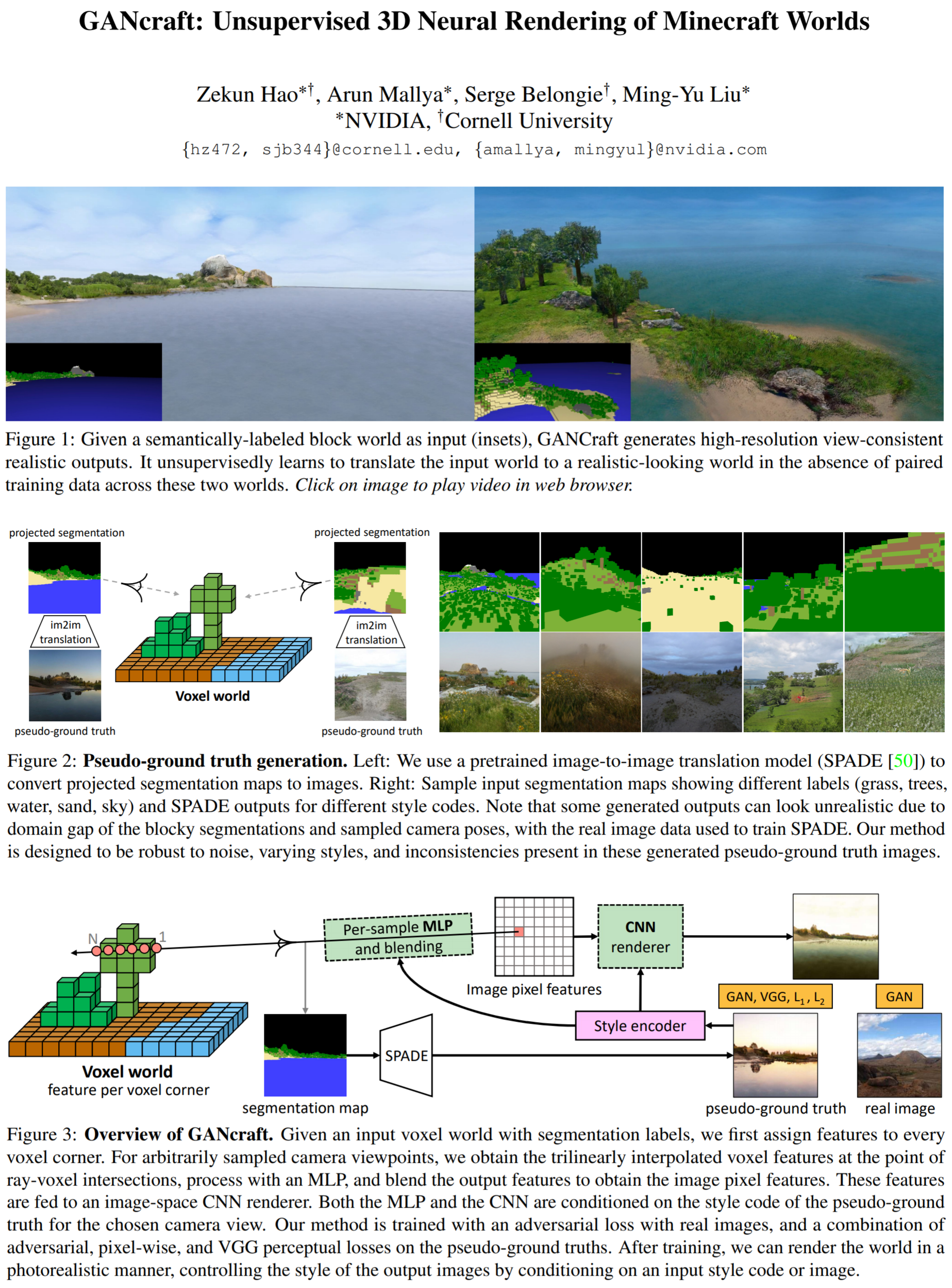

Did you ever want to quickly create a photorealistic 3D scene from scratch? Well, now you can! The authors from NVIDIA proposed a new neural rendering model trained with adversarial losses… WITHOUT a paired dataset. Yes, it only requires a 3D semantic block world as input, pseudo ground truth images generated by a pretrained image synthesis model, and any real landscape photos to output a view-consistent photorealistic render of the 3D scene corresponding to the input block world.

⌛️ Prerequisites:

(Highly recommended reading to understand the core ideas in this paper):

1) NeRF

🔍 Main Ideas:

1) Generating training data:

For each training iteration, a random camera pose on the upper hemisphere of the scene is sampled along with a focal length. The camera is used to project the block world to a 2D image to obtain a semantic segmentation mask. The mask, along with a random latent code, is passed to a pretrained SPADE network that generates the pseudo ground truth image corresponding to the sampled camera view of the Minecraft scene.
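To make the first half of this step concrete, here is a rough, dependency-free Python sketch: sample a camera on the upper hemisphere together with a random focal length, then ray-march the block world to get a per-pixel semantic mask. Everything here (`sample_camera`, `render_semantic_mask`, the elevation band, the focal range, the 16x16 resolution and the toy world) is a hypothetical illustration, not the authors' code. The SPADE step that turns the mask plus a latent code into a pseudo ground truth image is not shown.

```python
import math
import random


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def sample_camera(center, radius, rng, focal_range=(1.0, 2.0)):
    """Sample a camera on the upper hemisphere around `center` plus a focal length."""
    # z = cos(polar angle); drawing it uniformly gives area-uniform points on the
    # sphere. The band [0.1, 0.9] (an assumption) avoids the horizon and the zenith.
    z = rng.uniform(0.1, 0.9)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    s = math.sqrt(1.0 - z * z)
    eye = (center[0] + radius * s * math.cos(phi),
           center[1] + radius * s * math.sin(phi),
           center[2] + radius * z)
    focal = rng.uniform(*focal_range)  # in units of half the image width
    return eye, focal


def render_semantic_mask(voxels, center, eye, focal, size=16, step=0.05, max_dist=40.0):
    """Ray-march one ray per pixel and keep the label of the first occupied block.

    `voxels` maps integer (x, y, z) cells to semantic labels; rays that hit
    nothing are labeled "sky".
    """
    fwd = normalize(tuple(c - e for c, e in zip(center, eye)))
    right = normalize(cross(fwd, (0.0, 0.0, 1.0)))  # world up is +z
    up = cross(right, fwd)
    mask = []
    for row in range(size):
        labels = []
        for col in range(size):
            # Pixel center in normalized image coordinates, both in [-1, 1].
            u = (col + 0.5) / size * 2.0 - 1.0
            v = 1.0 - (row + 0.5) / size * 2.0
            d = normalize(tuple(focal * f + u * r + v * w
                                for f, r, w in zip(fwd, right, up)))
            label, t = "sky", 0.0
            while t < max_dist:
                cell = tuple(math.floor(e + t * di) for e, di in zip(eye, d))
                if cell in voxels:
                    label = voxels[cell]
                    break
                t += step
            labels.append(label)
        mask.append(labels)
    return mask


# Usage: a flat grass floor with a three-block tree trunk.
rng = random.Random(0)
world = {(x, y, -1): "grass" for x in range(-8, 8) for y in range(-8, 8)}
world.update({(3, 3, z): "wood" for z in range(3)})
center = (0.0, 0.0, 0.0)
eye, focal = sample_camera(center, radius=12.0, rng=rng)
mask = render_semantic_mask(world, center, eye, focal)
```

Marching with a fixed step is simply the shortest thing to write; an exact voxel traversal (Amanatides and Woo's DDA) would visit every block a ray crosses without any risk of stepping over thin geometry.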

2) Voxel-based volumetric neural renderer:

In GANcraft, the scene is represented by a set of voxels with corresponding semantic labels. Each block has its own voxel-bounded neural radiance field, and for points in space where no block exists, a null feature vector with density 0 is returned. To model diverse appearances of the same underlying scene, the radiance fields are conditioned on a style code z. Interestingly, the MLP that predicts features and densities is shared amongst all voxels. The location code is derived by computing Fourier features of the trilinear interpolation of learnable codes stored on the vertices of voxels.
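A minimal sketch of the location code, assuming one learnable vector per voxel corner (shared between neighboring blocks), trilinear interpolation inside the voxel that contains the query point, and NeRF-style sin/cos Fourier features on top. The helper names, the code size and the number of frequencies are made up for illustration, and the shared MLP that consumes this code together with the semantic label and the style code z is left out.

```python
import math
import random


def make_vertex_codes(voxels, dim, rng):
    """One learnable code per voxel corner; neighboring voxels share corners."""
    codes = {}
    for (x, y, z) in voxels:
        for dx in (0, 1):
            for dy in (0, 1):
                for dz in (0, 1):
                    corner = (x + dx, y + dy, z + dz)
                    if corner not in codes:
                        # Random init here; in training these would be optimized.
                        codes[corner] = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    return codes


def trilinear_code(p, codes, dim):
    """Trilinearly interpolate the 8 corner codes of the voxel containing p."""
    base = tuple(math.floor(c) for c in p)
    frac = tuple(c - b for c, b in zip(p, base))
    out = [0.0] * dim
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                w = ((frac[0] if dx else 1.0 - frac[0])
                     * (frac[1] if dy else 1.0 - frac[1])
                     * (frac[2] if dz else 1.0 - frac[2]))
                corner = codes[(base[0] + dx, base[1] + dy, base[2] + dz)]
                for i in range(dim):
                    out[i] += w * corner[i]
    return out


def fourier_features(code, num_freqs):
    """NeRF-style encoding: sin and cos of the code at octave-spaced frequencies."""
    feats = []
    for k in range(num_freqs):
        scale = (2.0 ** k) * math.pi
        feats.extend(math.sin(scale * c) for c in code)
        feats.extend(math.cos(scale * c) for c in code)
    return feats


def location_code(p, voxels, codes, dim, num_freqs=4):
    """Location code at point p, or None in empty space (null feature, density 0)."""
    if tuple(math.floor(c) for c in p) not in voxels:
        return None
    return fourier_features(trilinear_code(p, codes, dim), num_freqs)


# Usage: two adjacent blocks that share the face x = 1.
rng = random.Random(0)
voxels = {(0, 0, 0): "dirt", (1, 0, 0): "grass"}
codes = make_vertex_codes(voxels, dim=8, rng=rng)
left = trilinear_code((1.0 - 1e-9, 0.3, 0.7), codes, 8)
right = trilinear_code((1.0, 0.3, 0.7), codes, 8)
```

Because adjacent blocks share their corner codes, the interpolated code is continuous across block faces, which is what comparing `left` and `right` shows.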

3) Neural sky dome:

The sky is assumed to be infinitely far away, hence it is rendered as a large dome, and its color is obtained from a single MLP that maps a ray direction and a style code to a color value.
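A toy version of the sky dome, assuming a two-layer MLP with random (untrained) weights. What it illustrates is structural: since the dome is infinitely far away, the network never sees the camera position, so two rays pointing the same way from different cameras get the same sky color, while a different style code changes it. `SkyDomeMLP`, its layer sizes and the style vectors are hypothetical.

```python
import math
import random


class SkyDomeMLP:
    """Toy 2-layer MLP mapping (ray direction, style code) to an RGB color.

    There is no camera-position input: with the dome at infinity, the sky
    color depends only on where a ray points, not where it starts.
    """

    def __init__(self, style_dim, hidden=16, seed=0):
        rng = random.Random(seed)  # random weights stand in for trained ones
        in_dim = 3 + style_dim
        self.w1 = [[rng.gauss(0.0, 1.0 / math.sqrt(in_dim)) for _ in range(in_dim)]
                   for _ in range(hidden)]
        self.w2 = [[rng.gauss(0.0, 1.0 / math.sqrt(hidden)) for _ in range(hidden)]
                   for _ in range(3)]

    def __call__(self, direction, style):
        n = math.sqrt(sum(c * c for c in direction))
        x = [c / n for c in direction] + list(style)  # unit direction + style code
        h = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in self.w1]  # ReLU
        out = [sum(w * hi for w, hi in zip(row, h)) for row in self.w2]
        return [1.0 / (1.0 + math.exp(-o)) for o in out]  # sigmoid -> (0, 1)


sky = SkyDomeMLP(style_dim=4)
style_a, style_b = [0.5, -1.0, 0.2, 0.0], [-0.8, 0.3, 1.1, -0.5]
c1 = sky((0.3, 0.1, 0.9), style_a)
c2 = sky((0.6, 0.2, 1.8), style_a)  # same direction, e.g. a ray from another camera
c3 = sky((0.3, 0.1, 0.9), style_b)  # same direction, different style code
```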

4) Hybrid neural rendering:

The rendering is done in two phases: first, feature vectors are aggregated along the ray of each pixel; then a shallow CNN with a 9x9 receptive field converts the resulting feature map into an RGB image of the same size.
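The first phase can be sketched as standard NeRF-style alpha compositing, applied to feature vectors instead of colors. As an assumption, the sketch fills whatever transmittance is left at the end of a ray with the sky dome's output, which is the usual way to add a background. For the second phase, a 9x9 receptive field could come, for example, from four stacked 3x3 convolutions; that layer layout is a hypothetical choice, not necessarily the paper's.

```python
import math


def composite_ray(sigmas, feats, deltas, sky_feat):
    """Alpha-composite per-sample features along one ray (NeRF-style quadrature).

    sigmas: density at each sample (0 for samples in empty space),
    feats: feature vector at each sample, deltas: spacing between samples,
    sky_feat: background term weighted by the transmittance left at the end.
    Returns the pixel's aggregated feature and the leftover transmittance.
    """
    dim = len(sky_feat)
    out = [0.0] * dim
    transmittance = 1.0  # fraction of the ray not yet absorbed
    for sigma, f, delta in zip(sigmas, feats, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for i in range(dim):
            out[i] += weight * f[i]
        transmittance *= 1.0 - alpha
    for i in range(dim):
        out[i] += transmittance * sky_feat[i]
    return out, transmittance


def receptive_field(kernel_sizes):
    """Receptive field (in pixels) of a stack of stride-1 convolutions."""
    rf = 1
    for k in kernel_sizes:
        rf += k - 1
    return rf


sky = [0.2, 0.4, 0.9]
# A ray through empty space only sees the sky.
empty, t_empty = composite_ray([0.0] * 4, [[1.0, 0.0, 0.0]] * 4, [0.5] * 4, sky)
# A very dense first sample hides everything behind it, including the sky.
solid, t_solid = composite_ray([50.0, 0.0], [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]],
                               [1.0, 1.0], sky)
```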

5) Losses and regularizers:

The authors use L1, L2, perceptual, and adversarial losses on the pseudo ground truth images, and adversarial loss on the real images to train all of the models.
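A minimal sketch of how the objective splits, on flattened toy images: the reconstruction-style terms (L1, L2, perceptual) need a pixel-aligned target, which only the pseudo ground truth provides, while the adversarial term only needs a discriminator score, so unpaired real photos can supply the "real" side. The loss weights, the non-saturating logistic GAN loss and `toy_features` (a stand-in for a pretrained perceptual network such as VGG) are all assumptions for illustration, not the paper's exact settings.

```python
import math


def l1(a, b):
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def l2(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def toy_features(img):
    """Hypothetical stand-in for a pretrained perceptual network:
    here just differences between neighboring pixels."""
    return [b - a for a, b in zip(img, img[1:])]


def generator_loss(render, pseudo_gt, d_logit_render, weights, feat_fn=toy_features):
    """Loss for one rendered view whose pseudo ground truth was made by SPADE.

    L1, L2 and perceptual terms compare against the pixel-aligned pseudo
    ground truth; the adversarial term only uses the discriminator's logit.
    """
    rec = weights["l1"] * l1(render, pseudo_gt) + weights["l2"] * l2(render, pseudo_gt)
    perc = weights["perc"] * l1(feat_fn(render), feat_fn(pseudo_gt))
    adv = weights["adv"] * softplus(-d_logit_render)  # non-saturating GAN loss
    return rec + perc + adv


def discriminator_loss(d_logit_real, d_logit_fake):
    """Real images should score high and renders low (logistic GAN loss)."""
    return softplus(-d_logit_real) + softplus(d_logit_fake)


w = {"l1": 1.0, "l2": 1.0, "perc": 1.0, "adv": 1.0}  # hypothetical weights
img = [0.1, 0.5, 0.4, 0.9]
```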

📈 Experiment insights / Key takeaways:

- GANcraft has lower FID and KID than MUNIT and NSVF-W, and almost matches SPADE even though SPADE is not view-consistent

- GANcraft is the most view-consistent of all the compared methods


✏️My Notes:

- 5/5 Awesome paper/model name!

- No idea how they managed to fit all of this into memory. Even though the paper mentions that the two-stage approach helps reduce the memory footprint, they would still need to keep the activations for every ray to compute the per-image losses.

- Love how the paper brings together a bunch of ideas from unrelated papers into one cohesive narrative

- The results are far from perfect, but it is nevertheless a great start.

- Without the GAN loss, the model produces blurrier results; without pseudo ground truth images, the results are less realistic; and without two-phase rendering, they lack fine detail

🔗 Links:

GANcraft arXiv / GANcraft GitHub

👋 Thanks for reading!


If you found this paper digest useful, subscribe and share the post with your friends and colleagues to support Casual GAN Papers!

Join the Casual GAN Papers telegram channel to stay up to date with new AI Papers!


By: @casual_gan

P.S. Send me paper suggestions for future posts @KirillDemochkin!