38: ViTGAN Explained

ViTGAN: Training GANs with Vision Transformers by Kwonjoon Lee et al. explained in 5 minutes or less.

⭐️Paper difficulty: 🌕🌕🌕🌑🌑

🎯 At a glance:

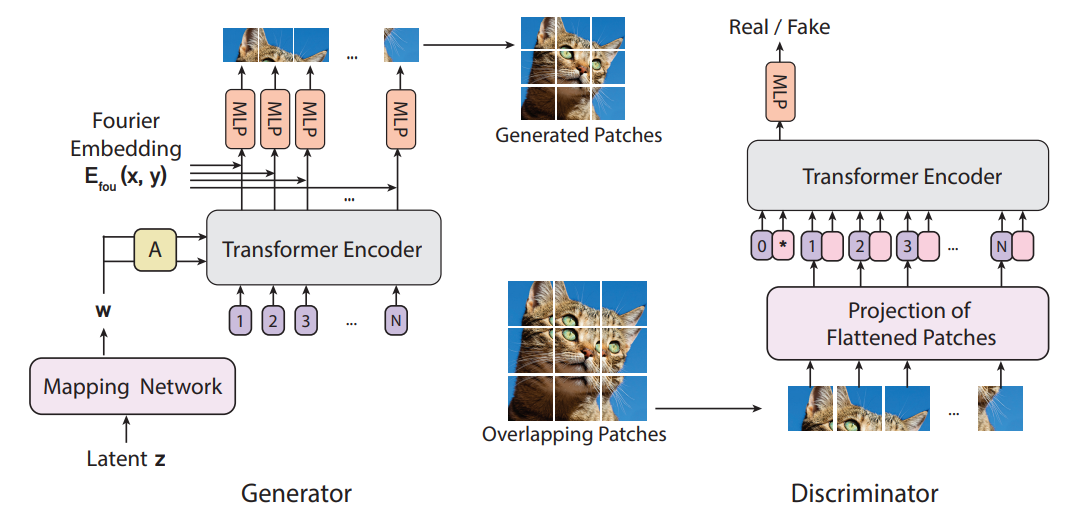

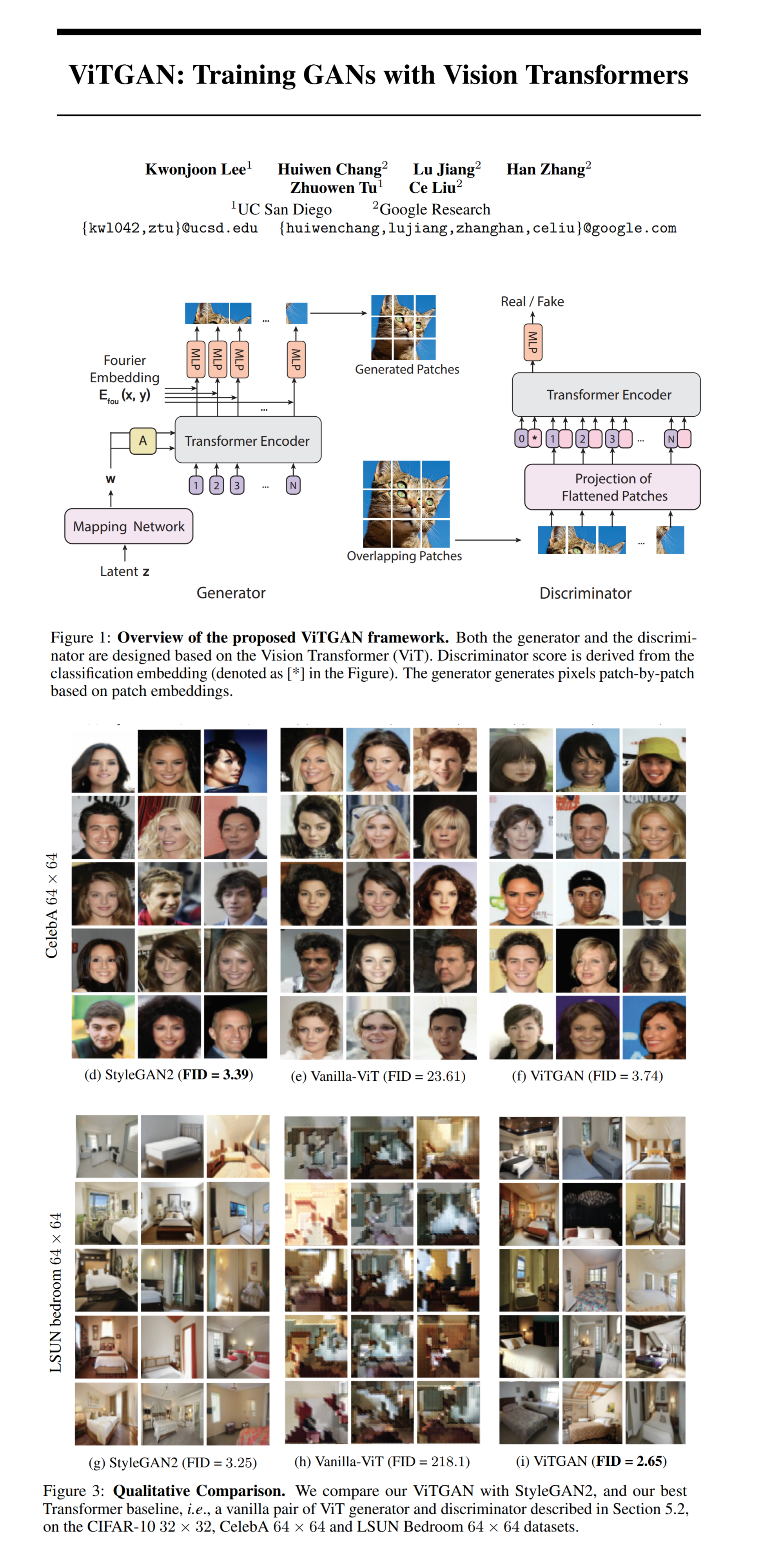

Transformers… Everywhere I look I see transformers (not the Michael Bay kind, thankfully 💥). It was only logical that they would eventually make their way into the magical world of GANs! Kwonjoon Lee and colleagues from UC San Diego and Google Research combined ViT, a popular vision transformer built on image-patch tokens and typically used for classification, with the GAN framework. The result is ViTGAN, a GAN with self-attention and new regularization techniques that overcome the instability of adversarially training Vision Transformers. ViTGAN achieves performance comparable to StyleGAN2 on a number of datasets, albeit at a tiny 64x64 resolution.

⌛️ Prerequisites:

(Highly recommended reading to understand the core contributions of this paper):

1) Spectral Normalization

2) ViT

3) CIPS

🔍 Main Ideas:

1) Regularizing the ViT discriminator: Lipschitz continuity ensures the existence of an optimal discriminative function, but a recent study showed that dot-product self-attention is not Lipschitz continuous. To enforce Lipschitz continuity in the ViT discriminator, the authors use L2 attention: the dot-product similarity is replaced with Euclidean distance, and the projection weights for the query and the key are tied.
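To make the L2 attention idea concrete, here is a minimal single-head NumPy sketch. It assumes the negative squared Euclidean distance is used as the attention logit and scaled by sqrt(d) (a common way to write L2 attention, not necessarily ViTGAN's exact formula); the names `l2_attention`, `W_qk` and `W_v` are hypothetical, and multi-head splitting and the output projection are left out.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along `axis`
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def l2_attention(X, W_qk, W_v):
    """Single-head L2 self-attention with tied query/key weights.

    X:    (N, D) token embeddings
    W_qk: (D, d) projection shared by queries AND keys (tied weights)
    W_v:  (D, d) value projection
    """
    d = W_qk.shape[1]
    Q = X @ W_qk
    K = X @ W_qk  # same matrix as the queries: this is the weight tying
    V = X @ W_v
    # Pairwise squared distances ||q_i - k_j||^2
    sq_dist = (
        (Q ** 2).sum(axis=1, keepdims=True)
        - 2.0 * Q @ K.T
        + (K ** 2).sum(axis=1)[None, :]
    )
    # Clip tiny negative values caused by floating-point cancellation
    sq_dist = np.maximum(sq_dist, 0.0)
    # Smaller distance -> larger attention weight (assumed sign convention)
    attn = softmax(-sq_dist / np.sqrt(d), axis=-1)
    return attn @ V, attn


# Usage: 5 random tokens of width 8, projected to width 4
rng = np.random.default_rng(0)
X = rng.normal(size=(5, 8))
out, attn = l2_attention(X, rng.normal(size=(8, 4)), rng.normal(size=(8, 4)))
print(out.shape)  # (5, 4)
```

Because queries and keys share `W_qk`, every token is at distance zero from itself, so in this toy example each token attends most strongly to itself.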

2) Improving spectral normalization: Standard spectral normalization, like the R1 gradient penalty, slows down the training of GANs with Transformer blocks, because Transformers are sensitive to the scale of the Lipschitz constant. A simple fix is to multiply the normalized weight matrix of each layer by its spectral norm at initialization, so each layer keeps its initial spectral norm instead of being rescaled to 1, which increases the spectral norm of the projection matrices.
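The improved spectral normalization boils down to one extra scalar per layer. Below is a hedged NumPy sketch: `spectral_norm` estimates the largest singular value by power iteration (real training code usually runs a single iteration per step with a persistent vector), and `ImprovedSpectralNorm` is a hypothetical helper that records σ(W_init) once and rescales later weights to that spectral norm rather than to 1.

```python
import numpy as np


def spectral_norm(W, n_iters=100, seed=0):
    """Estimate the largest singular value of W with power iteration."""
    rng = np.random.default_rng(seed)
    u = rng.normal(size=W.shape[0])
    for _ in range(n_iters):
        v = W.T @ u
        v /= np.linalg.norm(v)
        u = W @ v
        u /= np.linalg.norm(u)
    return float(u @ W @ v)


class ImprovedSpectralNorm:
    """Hypothetical helper: rescale W to sigma(W_init) instead of to 1."""

    def __init__(self, W_init):
        # The spectral norm is measured once, at initialization, and kept fixed
        self.sigma_init = spectral_norm(W_init)

    def __call__(self, W):
        # Standard SN returns W / sigma(W), i.e. spectral norm exactly 1.
        # Multiplying by sigma_init keeps the layer at its initial scale.
        return self.sigma_init * W / spectral_norm(W)


# Usage: the rescaled weight ends up with the spectral norm of W_init
rng = np.random.default_rng(1)
W_init = rng.normal(size=(16, 8))
isn = ImprovedSpectralNorm(W_init)
W_hat = isn(3.0 * rng.normal(size=(16, 8)))
print(np.linalg.norm(W_hat, 2), isn.sigma_init)
```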

3) Overlapping Image Patches: To keep the discriminator from overfitting and memorizing local cues from regular, non-overlapping patches, the authors let neighboring patches overlap slightly. They suggest this may give the Transformer a better sense of locality and make it less sensitive to the predefined patch grid.
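One simple way to get overlapping patches is sketched below, assuming each patch keeps the usual stride P but is enlarged by o pixels on every side (zero-padded at the image border). The helper name and the sizes in the usage lines are illustrative, not the paper's exact settings.

```python
import numpy as np


def overlapping_patches(img, patch=8, overlap=2):
    """Split an (H, W, C) image into flattened (patch + 2*overlap)^2 patches.

    Patches sit on the same stride-`patch` grid as ordinary ViT patches,
    but each window is enlarged by `overlap` pixels on every side, so
    neighbouring patches share pixels.
    """
    H, W, C = img.shape
    assert H % patch == 0 and W % patch == 0, "image must tile evenly"
    # Zero padding so border patches can also extend `overlap` pixels outward
    padded = np.pad(img, ((overlap, overlap), (overlap, overlap), (0, 0)))
    size = patch + 2 * overlap
    tokens = []
    for top in range(0, H, patch):
        for left in range(0, W, patch):
            # In padded coordinates the enlarged window starts at (top, left)
            window = padded[top:top + size, left:left + size]
            tokens.append(window.reshape(-1))
    return np.stack(tokens)  # (num_patches, size * size * C)


# Usage: a 32x32 RGB image, 8x8 patches enlarged to 12x12
img = np.arange(32 * 32 * 3, dtype=float).reshape(32, 32, 3)
tokens = overlapping_patches(img, patch=8, overlap=2)
print(tokens.shape)  # (16, 432)
```

The number of tokens stays the same as with non-overlapping patches; only each token's footprint grows from P×P to (P+2o)×(P+2o).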

4) Generator design: An important consideration when designing a Transformer generator is how to use the latent variable that the synthesized images are conditioned on; specifically, how the style vector modulates the generator's intermediate representations. The authors considered a baseline ViT in which the style vector is appended to the tokens in various places, but settled on self-modulated LayerNorm (SLN) instead. It is basically an AdaIN layer that uses the style vector to apply an affine transform to an embedding instead of a feature map.
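A self-modulated LayerNorm can be sketched as an ordinary LayerNorm whose per-channel scale γ and shift β are predicted from the style vector w. In this minimal NumPy version, the linear maps `W_gamma`, `b_gamma`, `W_beta` and `b_beta` are hypothetical parameters; the paper's exact parameterization may differ.

```python
import numpy as np


def self_modulated_layernorm(h, w, W_gamma, b_gamma, W_beta, b_beta, eps=1e-5):
    """LayerNorm whose scale and shift are computed from the style vector.

    h: (N, D) token embeddings, w: (S,) style vector.
    W_gamma, W_beta: (S, D) hypothetical linear maps from w to
    per-channel scale and shift; b_gamma, b_beta: (D,) biases.
    """
    mu = h.mean(axis=-1, keepdims=True)
    sigma = np.sqrt(h.var(axis=-1, keepdims=True) + eps)
    gamma = w @ W_gamma + b_gamma  # (D,) scale predicted from w
    beta = w @ W_beta + b_beta     # (D,) shift predicted from w
    # Same normalization as LayerNorm, but the affine part depends on w:
    # AdaIN-style modulation applied to token embeddings
    return gamma * (h - mu) / sigma + beta


# Usage: 4 tokens with 6 channels, modulated by a 3-dim style vector
rng = np.random.default_rng(0)
h = rng.normal(size=(4, 6))
w = rng.normal(size=3)
W_gamma, W_beta = rng.normal(size=(3, 6)), rng.normal(size=(3, 6))
out = self_modulated_layernorm(h, w, W_gamma, np.ones(6), W_beta, np.zeros(6))
print(out.shape)  # (4, 6)
```

With `W_gamma` and `W_beta` set to zero (and biases of 1 and 0), the layer reduces to a plain LayerNorm without learned affine parameters, which makes the "AdaIN for embeddings" view easy to see.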

5) Implicit Neural Representation: ViTGAN uses an implicit-representation setup similar to CIPS. Concretely, each patch is generated by a 2-layer MLP whose input is a grid of Fourier-feature positional encodings concatenated with the embedding vector of the patch being synthesized.
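A rough NumPy sketch of the patch decoder described in idea 5: Fourier features of the pixel coordinates inside a patch are concatenated with that patch's embedding and passed through a 2-layer MLP. The random frequency matrix `B`, the ReLU hidden activation, the `tanh` output and all parameter names are assumptions for illustration; the actual ViTGAN and CIPS layers (for example, whether the embedding is concatenated or used to modulate the weights) may differ.

```python
import numpy as np


def fourier_features(patch, B):
    """Fourier-feature encoding of the pixel coordinates of one patch.

    Coordinates lie on a patch x patch grid in [-1, 1]^2; B is a
    (2, F) frequency matrix. Returns (patch*patch, 2F).
    """
    lin = np.linspace(-1.0, 1.0, patch)
    yy, xx = np.meshgrid(lin, lin, indexing="ij")
    coords = np.stack([yy.ravel(), xx.ravel()], axis=1)  # (P*P, 2)
    proj = 2.0 * np.pi * coords @ B                      # (P*P, F)
    return np.concatenate([np.sin(proj), np.cos(proj)], axis=1)


def render_patch(y, patch, B, W1, b1, W2, b2):
    """Map one patch embedding y to (patch, patch, 3) pixels via a 2-layer MLP."""
    enc = fourier_features(patch, B)
    # Every pixel in the patch sees the same embedding next to its own position code
    y_tiled = np.broadcast_to(y, (enc.shape[0], y.shape[0]))
    inp = np.concatenate([enc, y_tiled], axis=1)
    hidden = np.maximum(inp @ W1 + b1, 0.0)  # layer 1 + ReLU (assumed activation)
    rgb = np.tanh(hidden @ W2 + b2)          # layer 2, squashed to [-1, 1]
    return rgb.reshape(patch, patch, 3)


# Usage: one 4x4 patch from a 16-dim embedding
rng = np.random.default_rng(0)
P, F, E, H = 4, 8, 16, 32  # patch size, frequencies, embedding dim, hidden width
B = rng.normal(size=(2, F))
y = rng.normal(size=E)
W1, b1 = 0.1 * rng.normal(size=(2 * F + E, H)), np.zeros(H)
W2, b2 = 0.1 * rng.normal(size=(H, 3)), np.zeros(3)
pixels = render_patch(y, P, B, W1, b1, W2, b2)
print(pixels.shape)  # (4, 4, 3)
```

The same MLP weights are shared by all patches; only the embedding `y` changes from patch to patch, which is what makes the representation implicit.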

📈 Experiment insights / Key takeaways:

- The ViT discriminator and generator use 4 Transformer blocks at 32x32 and 6 blocks at 64x64

- The baselines are StyleGAN2, ViT base (a configuration without the self-modulation layer), and TransGAN-XL (Note: either I am blind or there is no comparison with TransGAN anywhere in the paper 🤨). ViTGAN outperforms them at 32x32 and 64x64 resolution

- It seems that ViTGAN still falls short of the best CNN-based GAN models

🖼️ Paper Poster:

✏️My Notes:

- (3/5) Right, ViTGAN is very much to the point; nothing more to say here

- I don’t know guys, this seems like a poor man’s GANsformer 😅

- Oh, TransGAN is mentioned after all. I was planning to cover it next, but the paper says its results are worse, hm.

🔗 Links:

ViTGAN arXiv / ViTGAN GitHub (unofficial)

👋 Thanks for reading!

If you found this paper digest useful, subscribe and share the post with your friends and colleagues to support Casual GAN Papers!

Join the Casual GAN Papers telegram channel to stay up to date with new AI Papers!

Join Patreon for Exclusive Perks!

By: @casual_gan

P.S. Send me paper suggestions for future posts @KirillDemochkin!